The Daily AI Show: Issue #80

More benchmarks than we can shake a stick at

Welcome to Issue #80

Coming Up:

Why Shipmas Helps Some AI Companies and Hurts Others

AI Is Taking Over Operating Layers in the Physical World

The Coming Decision About AI Teaching Itself

What Anthropic’s Interviewer Study Gets Right About AI at Work

Plus, we discuss new AI model fatigue, whether AI’s climate impact is actually positive, the messy middle conundrum, and all the news we found interesting this week.

It’s Sunday morning.

Before we jump in, we just wanted to say thank you to all of you who listen to our show on Spotify. As you can see, we grew quite a bit in 2025, and that is thanks to all of you.

So from all of the DAS crew, thank you!

Our Top AI Topics This Week

Why Shipmas Helps Some AI Companies and Hurts Others

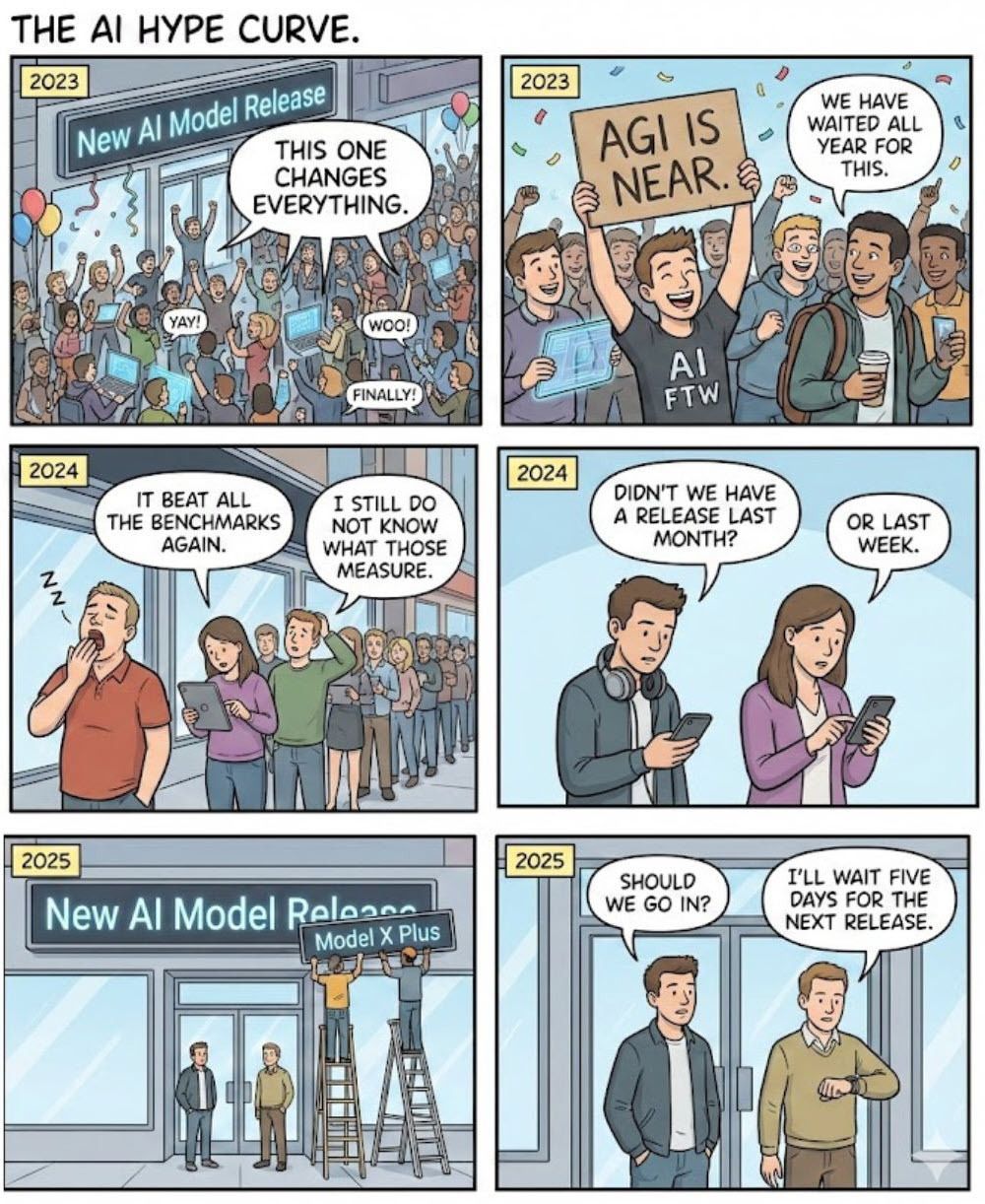

AI companies are starting to embrace multi-day launch events where they release something new each day. These events generate buzz fast, create momentum, and keep the company in the conversation. But they come with real downsides, especially when the market expects meaningful progress instead of small updates.

On the positive side, Shipmas-style product launches can help companies reframe their narrative. A week of daily drops makes the company look active, inventive, and confident. It gives users a steady stream of new features to try. It also creates a cultural moment that gets amplified across social platforms. Smaller companies benefit even more because a well-timed launch run can lift their visibility far beyond their usual reach.

The flip side is the cost of missing the mark. When a company is under pressure or seen as falling behind, a rapid-fire launch event magnifies every weakness. If the releases feel minor or rushed, the entire event backfires. Instead of signaling strength, it signals struggle. Users begin to treat the daily drops as filler. The gap between hype and delivery becomes the story.

There is also operational strain. Engineering, security, support, and marketing teams must deliver at a relentless pace during a tight calendar window. If a company is already fighting stability issues or user churn, Shipmas becomes a precarious distraction. It trades long-term product depth for short-term spectacle.

The real question is timing. When a company is leading, Shipmas works. It reinforces momentum. When a company is behind, Shipmas exposes the gap. Daily releases can help, but only when the substance is strong enough to match the cadence.

AI Is Taking Over Operating Layers in the Physical World

AI is moving from analyzing data to directly controlling real-world systems. Reinforcement learning models now stabilize plasma inside fusion reactors. Agents handle parts of refinery operations. Weather forecasts increasingly come from AI models rather than physics simulations. Materials labs let AI choose experiments and operate robotics. This shift raises a hard question: how much control should AI have over systems where mistakes can cause physical damage, environmental harm, or cascading failures?

The upside is clear. AI-driven control loops react faster than any human. They can track and titrate unstable processes, optimize energy output, explore new configurations, and run thousands of simulations before acting. Fusion research is a perfect example. RL controllers already shape and stabilize plasma in live reactors, something that used to require careful hand-tuned physics models. As these systems mature, they could accelerate breakthroughs in energy, materials, and climate science.

The risks are just as real. A control policy that works in a simulation does not always behave the same way in the physical world. A model that learns to maximize one objective can unintentionally push a real-world system into unsafe territory. And there is a transparency problem. These controllers do not always explain why they choose specific actions, which creates a governance gap when stakes are high.

There is also a political dimension. Whoever builds and controls these AI process-management systems effectively controls the engines of the future energy grid, industrial base, and scientific discovery pipelines. That raises questions about oversight, accountability, and who sets the objective functions these systems should optimize for.

The next phase of AI is not about chat interfaces. It is about operational control. Before we let AI run reactors, power grids, and automated labs at scale, we need clear principles. Safety layers that cannot be bypassed. Environmental safeguards. Transparent logs that support audit and investigation. Human override systems that do not rely on perfect human reaction time. And governance structures that recognize the power of these systems and keep that power aligned with public interest.
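To make "safety layers that cannot be bypassed," "transparent logs," and "human override" a little more concrete, here is a minimal Python sketch of a shield that sits between a learned controller and the physical system. It assumes a single scalar command (for example, an actuator setting) with hypothetical hard bounds and a per-step rate limit; the names SafetyEnvelope and shielded_action are illustrative, not taken from any real control stack. Every command is clamped before it reaches the plant, and each decision is written to an audit log.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class SafetyEnvelope:
    """Hypothetical hard limits enforced outside the learned controller."""
    min_action: float
    max_action: float
    max_step_change: float  # largest allowed change between consecutive commands
    audit_log: List[str] = field(default_factory=list)

    def shielded_action(
        self, proposed: float, previous: float, human_override: Optional[float] = None
    ) -> Tuple[float, str]:
        """Return the command actually sent to the plant and where it came from."""
        if human_override is not None:
            # A human command replaces the controller outright; only the absolute
            # bounds apply, so an operator is never slowed by the rate limit.
            safe = min(max(human_override, self.min_action), self.max_action)
            source = "human_override"
        else:
            # The controller may never exceed the bounds or jump too far in one step.
            low = max(self.min_action, previous - self.max_step_change)
            high = min(self.max_action, previous + self.max_step_change)
            safe = min(max(proposed, low), high)
            source = "controller" if safe == proposed else "controller_clamped"
        # Every decision is logged so it can be audited and investigated later.
        self.audit_log.append(f"{source}: proposed={proposed} sent={safe}")
        return safe, source


shield = SafetyEnvelope(min_action=0.0, max_action=1.0, max_step_change=0.25)
print(shield.shielded_action(proposed=0.9, previous=0.5))  # (0.75, 'controller_clamped')
```

The key design choice is that the shield is plain, inspectable code that runs after the learned policy, so no matter what the controller proposes, the limits hold.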

This shift will define the next decade. AI is becoming the operating system for the real physical world. The benefits are huge, but only if we build in the right controls from the start.

The Coming Decision About AI Teaching Itself

Anthropic’s chief scientist, Jared Kaplan, made one of the clearest and bluntest statements about the future of AI so far. He said we may be only a few years away from having to decide whether AI systems should be allowed to train the next generation of AI. That single shift would accelerate model improvement far faster than human oversight can follow, and it raises a set of difficult tradeoffs that cannot be pushed off indefinitely. In small ways, it is already happening in AI development.

Kaplan’s view is direct: AI systems will be capable of most white-collar work within two to three years. His example was simple: his six-year-old son will never outperform an AI on writing or math. That is not because humans are getting worse. It is because the slope of model improvement is rising faster than the increments of human expertise in those domains. If models start training on the output of even more advanced models, the slope steepens again.

The potential benefits are obvious. Faster biomedical research. Stronger cybersecurity. Accelerated scientific discovery. Massive productivity gains. More time for people to focus on higher-value work. But the risks grow in parallel. Once models begin improving themselves, it becomes harder for humans to trace how they “think” and how they reach decisions. Alignment becomes more complex. Error correction becomes harder. And failure points become more opaque.

The deeper issue is not science fiction. It is governance. Who will decide whether self-improvement loops are allowed for AI? Who evaluates the safety of recursive training? Who sets the boundaries when economic incentives favor speed over caution? Every major lab knows these questions are coming, and the timelines are tightening.

This is the edge of the next phase of AI. The choice is not whether models get smarter; they will. The choice is whether we set rules before the acceleration kicks in, or try to set them after it is already underway.

What Anthropic’s Interviewer Study Gets Right About AI at Work

Anthropic ran a large-scale interview experiment with professionals across the workforce. The goal was simple: understand how people actually feel about AI in their real jobs, not in theory. The results highlight three patterns that matter for anyone building or deploying AI inside an organization.

The first insight is about human identity. People want AI to remove repetitive tasks, but they do not want AI taking the parts of their job that define who they are. Sales teams want help drafting emails, taking notes, and prepping meetings, but they want the human relationship to stay human. Designers want help with variations and cleanup, but they want the key creative choices to stay theirs. Scientists want an AI partner, not an AI researcher that replaces the intellectual work they value. This tension will shape adoption. Any AI rollout that ignores the issue of identity will fail.

The second insight is about trust built through supervision. Professionals are generally positive about AI, but they want to be in charge of it. They want oversight, steering, and the ability to correct the system. They do not want an autonomous tool that makes decisions without them. This pattern showed up across all groups. Scientists want oversight to avoid hallucinated conclusions. Creatives want oversight to avoid style drift. General workers want oversight to ensure quality and accuracy. The message is clear: people want to manage the AI, not be managed by it.

The third insight is about rising expectations. As more people use AI, they expect these systems to feel like competent teammates. They want them to remember context, follow directions, and improve over time. They want less friction and fewer resets. This is important because it means adoption will accelerate for tools that feel reliable, and drop for tools that create extra work. Stability and reliability are becoming key features.

Anthropic’s interviewer study shows something simple but often overlooked in the rush toward AI-powered productivity gains: People do not want AI to replace their work. They want AI to clear the path so they can do the parts of their work that matter. Companies that design AI systems around that principle will scale adoption much faster than companies that push automation without understanding the human side.

Just Jokes

AI For Good

A new study from the University of Waterloo found that AI’s overall environmental footprint is smaller than many people think. The research team looked at the full lifecycle of AI systems, including training, hardware manufacturing, energy use, and model deployment. Their conclusion was that AI currently accounts for a very small portion of global electricity demand and that its growth is not on track to overwhelm energy grids.

The study also points out that AI is helping accelerate progress in climate tech. Examples include optimizing renewable energy production, improving grid efficiency, and reducing wasted power in heavy industry. The researchers argue that AI’s positive climate impact may outweigh its footprint if development continues to prioritize efficient models and greener infrastructure. This gives policymakers and companies a clearer foundation to design climate strategies that use AI as a tool rather than treating it as an environmental threat.

This Week’s Conundrum

A difficult problem or question that doesn't have a clear or easy solution.

The Messy Middle Conundrum

For all of human history, "competence" required struggle. To become a writer, you had to write bad drafts. To become a coder, you had to spend hours debugging. To become an architect, you had to draw by hand. The struggle was where the skill was built. It was the friction that forged resilience and deep understanding. AI removes the friction. It can write the code, draft the contract, and design the building instantly. We are moving toward a world of "outcome maximization," where the result is all that matters, and the process is automated. This creates a crisis of capability. If we no longer need to struggle to get the result, do we lose the capacity for deep thought? If an architect never draws a perspective line, do they truly understand space? If a writer never struggles with a sentence, do they understand the soul of the story? We face a future where we have perfect outputs, but the humans operating the machines are intellectually atrophied.

The conundrum:

Do we fully embrace the efficiency of AI to eliminate the drudgery of "process work," freeing us to focus solely on ideas and results, or do we artificially manufacture struggle and force humans to do things the "hard way" just to preserve the depth of human skill and resilience?

Want to go deeper on this conundrum?

Listen to our AI-hosted episode

Did You Miss A Show Last Week?

Catch the full live episodes on YouTube or take us with you in podcast form on Apple Podcasts or Spotify.

News That Caught Our Eye

DeepSeek Releases Three New Reasoning Models

DeepSeek launched three new models over the weekend, including DeepSeek 3.2 and DeepSeek 3.2 Speciale. Both are reasoning-focused models built specifically for agent use. Version 3.2 replaces the earlier experimental release and is now live across app, web, and API. The Speciale variant is API only.

Deeper Insight:

Chinese open-source models continue to close the gap with frontier systems. Their performance is now strong enough for most real-world agent work, and the open-weights approach removes ongoing licensing costs for startups and builders.

Perplexity Adds AI Assistants With Memory

Perplexity rolled out memory for its AI assistants. The system stores user-specific information that can be applied across different models inside Perplexity, including Gemini, Claude, and GPT variants. The goal is to deliver more relevant suggestions in shopping, travel, and everyday search tasks.

Deeper Insight:

Memory becomes more valuable when it sits above the model layer instead of inside a single model. This lets users mix models without losing personalization, which is a strategic advantage for platforms built around orchestration rather than a single model. Among the most well-known AI services, Perplexity may be in a unique position to offer this above-model personalized context-memory for application to AI assistants.
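The idea of memory sitting above the model layer can be illustrated with a short Python sketch. It assumes each model is simply a function from prompt to reply; MemoryLayer, model_a, and model_b are hypothetical stand-ins, and this is not how Perplexity actually implements memory. The user's facts are stored once and injected into whichever model handles the request, so switching models does not lose personalization.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical model backend: any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


@dataclass
class MemoryLayer:
    """User memory kept above the model layer and shared by every backend."""
    facts: Dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value

    def build_prompt(self, user_message: str) -> str:
        # Render stored facts as a context preamble; this format is an assumption.
        context = "\n".join(f"- {k}: {v}" for k, v in sorted(self.facts.items()))
        return f"Known user context:\n{context}\n\nUser: {user_message}"

    def ask(self, model: ModelFn, user_message: str) -> str:
        # The same memory is injected no matter which model handles the request.
        return model(self.build_prompt(user_message))


# Two stand-in "models" so the sketch runs without any API keys.
def model_a(prompt: str) -> str:
    return "A saw: " + prompt


def model_b(prompt: str) -> str:
    return "B saw: " + prompt


memory = MemoryLayer()
memory.remember("home_airport", "SFO")
memory.remember("diet", "vegetarian")
print(memory.ask(model_a, "Plan a weekend trip"))
print(memory.ask(model_b, "Plan a weekend trip"))
```

Both calls receive the same stored context, which is the property that makes orchestration platforms well placed to offer cross-model memory.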

Suno Partners With Warner Music Group

Suno announced a partnership with Warner Music Group to support licensed music creation inside the platform. The agreement will introduce new tools for fans to interact with artists, enable collaborative AI-driven music experiences, and give musicians new ways to earn royalties from AI-generated content.

Deeper Insight:

Major labels are moving toward structured licensing rather than litigation. AI music is scaling too fast for old approaches to work. Commercial partnerships will define how royalties, rights, and remixing evolve in the next decade.

Runway Unveils New Frontier Text-to-Video Model

Runway introduced a new frontier video model built in extensive collaboration with Nvidia on its GPUs. The model supports advanced camera choreography, precise scene transitions, multi-event timing, and realistic physical behavior. Runway also claims major gains over current models on text-to-video benchmarks.

Deeper Insight:

Instruction following has become the key differentiator in video generation. Tools that let creators control shots, movement, and transitions precisely are positioned to lead commercial work, especially as more agencies begin producing AI-first ad content.

Ads Coming Soon to ChatGPT

ChatGPT will begin testing ad placements inside conversational responses. Advertisers will be able to target users based on conversational context rather than keyword queries. This marks the first significant shift from search-based ad targeting to AI dialogue-based targeting.

Deeper Insight:

As assistants replace search, ad networks will rebuild around conversation. The challenge will be blending sponsored content without breaking user trust, especially if ads appear inside long reasoning chains or voice interactions.

Research Shows Small Models With Tool Orchestration Can Beat Frontier Models

A joint team from Nvidia and the University of Hong Kong released research on “ToolOrchestra,” a system that trains an orchestrator model to decide when to reason internally or call external tools. An 8B model using this approach outperformed GPT-5 and Claude Opus 4.1 on Humanity’s Last Exam while running more efficiently.

Deeper Insight:

The frontier race is shifting from raw scale to intelligent coordination. Smaller models combined with tool orchestration can outperform larger monolithic models at a fraction of the cost.
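A toy Python sketch of the orchestration pattern: a router decides, per query, whether to answer internally or call a tool. In ToolOrchestra the routing policy is itself a trained 8B model; here a hand-written rule stands in for it, and the tools (calculator, lookup) are hypothetical placeholders for real search engines, code interpreters, or larger models.

```python
from typing import Callable, Dict, Tuple


def calculator(query: str) -> str:
    # Toy tool: only handles integer addition written as "a + b".
    left, right = query.split("+")
    return str(int(left) + int(right))


def lookup(query: str) -> str:
    # Toy tool: a tiny fixed knowledge base standing in for web search.
    knowledge = {"capital of france": "Paris"}
    return knowledge.get(query.strip().lower(), "unknown")


def answer_internally(query: str) -> str:
    # Stand-in for the small model answering from its own weights.
    return f"(model's own answer to: {query})"


HANDLERS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "lookup": lookup,
    "internal": answer_internally,
}


def route(query: str) -> str:
    """Choose an action. A trained orchestrator learns this; here it is a fixed rule."""
    if "+" in query:
        return "calculator"
    if query.strip().lower().startswith("capital of"):
        return "lookup"
    return "internal"


def orchestrate(query: str) -> Tuple[str, str]:
    action = route(query)
    return action, HANDLERS[action](query)


for q in ["2 + 40", "Capital of France", "Write a haiku about GPUs"]:
    print(orchestrate(q))
```

The cost argument in the research comes from this split: the orchestrator only has to be good at deciding, and it delegates the work it is bad at to cheaper or more specialized tools.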

OpenAI Partners With NORAD for AI Powered Santa Tracking

OpenAI and NORAD teamed up to add new interactive features to the annual “NORAD Tracks Santa” experience. The update includes Elf Enrollment for creating personalized elf images, Santa’s Toy Lab for turning kids’ ideas into printable coloring pages, and a Christmas Story Creator for generating custom holiday stories.

Deeper Insight:

This signals how consumer AI will continue blending with seasonal traditions and entertainment. These light features are also early tests of how families interact with AI-generated images and stories at scale.

Google Previews Projects Feature for Gemini

Google is preparing to launch a Projects system inside Gemini. Projects will group multiple chats under one workspace with shared memory and context. It mirrors the structure already used inside ChatGPT’s Projects feature and aims to support longer running workflows.

Deeper Insight:

This pushes Gemini deeper into productivity and collaboration use cases. Google is positioning Gemini to act less like a chat window and more like an operating system layer that manages multi-step work.

Apple Replaces Its Head of AI After Siri Stagnation

Apple removed John Giannandrea from his role leading AI strategy after continued delays in improving Siri. He has been replaced by Amar Subramanya, a former Google executive with deep experience in AI and ML engineering. The leadership change arrives as Apple tries to catch up in the generative AI race.

Deeper Insight:

This is Apple’s clearest signal yet that it intends to overhaul its AI stack. Siri’s shortcomings are no longer a tolerated weakness. Apple is now recruiting leaders with proven results in large-scale model integration.

Clone Robotics Reveals Advanced AI Controlled Hand

Clone Robotics released a new video showing a highly dexterous robotic hand powered by hydraulic muscle fibers and controlled via motion capture. The hand demonstrates natural motion, grip articulation, and precise motor control that closely mirrors the operator’s hand movements.

Deeper Insight:

Robotic hand dexterity is advancing much faster than full humanoid capability. High-precision hands will reach commercial usefulness long before household humanoid robots become viable.

Kling Begins Omni Launch Week for New Video Generation Models

Kling announced its Omni Launch Week, debuting Kling O1, a new creative engine for multimodal video generation. Additional model drops and feature releases are planned throughout the week.

Deeper Insight:

Video generation is entering its rapid iteration phase. Frequent model drops and launch cycles suggest the competition will mirror the fast pace seen in text and image generation in 2023 and 2024.

Higgsfield Adds Support for Kling O1 Video Models

Higgsfield, a platform that aggregates multiple AI video models, added Kling O1 and Kling O1 Video Edit. This allows creators to test Kling’s new capabilities without subscribing directly to Kling.

Deeper Insight:

Aggregation platforms are becoming the onramp for creators who want to compare multiple models quickly. The winners in AI video will likely be those that integrate seamlessly into these testing ecosystems.

OpenAI Internal “Code Red” Memo Reveals Concern Over Google’s Pace

A leaked internal memo indicates OpenAI leadership is reevaluating priorities after Gemini 3 surpassed expectations. The memo suggests the company may pause feature rollouts (like the previously announced addition of ads) to focus on accelerating its next major model release.

Deeper Insight:

OpenAI’s competitive posture has shifted from confident to reactive. Google’s rapid cadence has changed industry expectations, and OpenAI now faces pressure to reassert leadership with a stronger core model.

Nvidia Releases Alpamayo-R1 Vision-Language-Action Model

Nvidia introduced Alpamayo-R1, an open-source vision-language-action (VLA) model designed for robotics and autonomous systems. The model combines visual input, language reasoning, and real-time action planning, making it suitable for robotics development, autonomous vehicles, and embodied AI. Nvidia intends to seed the robotics ecosystem so developers adopt its compute platforms like Thor.

Deeper Insight:

Nvidia is positioning itself as the default stack for future robotics. By open-sourcing the model while still selling the hardware, Nvidia strengthens its long-term position in the embodied AI market.

AI Powered Artificial Nose Detects Dangerous Odors

Researchers highlighted a new artificial electronic nose that can help users who lack a strong sense of smell detect hazards like gas leaks. The system uses sensor arrays that produce unique activation patterns, which machine learning models classify as safe or dangerous scents.

Deeper Insight:

AI-enabled sensory prosthetics are beginning to move from concept to practical tools. This category will grow as more devices interpret real world signals that the human body struggles to detect reliably.
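The classification step behind the artificial nose can be sketched in Python. The study's actual models and sensor data are not described here, so this swaps in the simplest possible technique, a nearest-centroid classifier: each scent has a reference activation pattern across a hypothetical four-sensor array, and a new reading is labeled with the closest pattern and then flagged safe or dangerous. All numbers are made up for illustration.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical reference patterns: average normalized responses of a 4-sensor array.
# These values are illustrative only, not measurements from the study.
REFERENCE_PATTERNS: Dict[str, List[float]] = {
    "clean_air": [0.1, 0.1, 0.1, 0.1],
    "coffee": [0.6, 0.2, 0.1, 0.3],
    "natural_gas": [0.2, 0.9, 0.7, 0.1],
    "smoke": [0.8, 0.4, 0.9, 0.6],
}
DANGEROUS = {"natural_gas", "smoke"}


def classify(reading: List[float]) -> Tuple[str, str]:
    """Label a sensor reading by its nearest reference pattern (Euclidean distance)."""
    label = min(
        REFERENCE_PATTERNS,
        key=lambda name: math.dist(reading, REFERENCE_PATTERNS[name]),
    )
    return label, ("dangerous" if label in DANGEROUS else "safe")


print(classify([0.25, 0.85, 0.65, 0.15]))  # ('natural_gas', 'dangerous')
```

A real device would use a learned model and far more sensors, but the principle is the same: each odor leaves a distinctive pattern across the array, and the model maps patterns to labels.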

FDA Deploys Agentic AI Systems Across the Entire Agency

The US Food and Drug Administration began rolling out internal agentic AI tools that assist with meeting management, premarket reviews, validation, inspections, surveillance, and administrative workflows. More than 70 percent of employees already use Elsa, an earlier AI assistant, and the agency will host an AI challenge in 2026 for internal teams to propose new use cases.

Deeper Insight:

This is one of the largest real world deployments of agentic AI in government. If successful, it will become the template for other federal agencies that want modernization without exposing regulated data.

Alibaba Introduces Agent Evolver for Self-Improving Agents

Alibaba’s Tongyi Lab released Agent Evolver, a system where agents generate their own training data by exploring software environments autonomously. The agent can drop into full-stack systems, learn how they function, and master workflows with minimal human involvement. The approach uses reinforcement learning and is designed for use both in software and in the real world.

Deeper Insight:

Self-improving agents reduce the cost of building and maintaining automation. This accelerates the shift toward systems that do not rely on handcrafted training data or manual tuning.

ByteDance’s Doubao Becomes China’s Consumer AI Leader

ByteDance’s Doubao reached 172 million monthly active users in September, becoming China’s most widely used consumer AI app. It now surpasses competitors like DeepSeek’s app and Tencent’s Yuanbao. Globally, Doubao ranks fourth among generative AI mobile apps.

Deeper Insight:

ByteDance is replicating its TikTok playbook for AI. While other Chinese firms focus on research or enterprise, ByteDance is dominating consumer adoption through aggressive product iteration.

Mistral Releases New Open Source Models Including Mistral Large 3

Mistral unveiled several new models, including Mistral Large 3, a mixture-of-experts (MoE) system with 675 billion total parameters, of which 41 billion are active for each token. It supports multimodal inputs and offers a 256,000-token context window. Smaller models in the 3 series can run on phones or edge devices and aim for extremely low token usage.

Deeper Insight:

Mistral is building a full spectrum of open models that compete directly with proprietary systems while running more efficiently. Their focus on edge deployment positions them well for device-based AI.
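The "675 billion total, 41 billion active" figures follow from how mixture-of-experts routing generally works: a small gating network scores every expert for each token, and only the top-k experts run. The Python sketch below shows top-k gating with a softmax over the selected experts, plus the active-parameter arithmetic (41 / 675 is roughly 6 percent). The expert count, k, and gate scores are hypothetical; the source does not describe Mistral's router, and in practice the active figure typically also counts shared layers such as attention.

```python
import math
from typing import Dict, List


def top_k_route(gate_logits: List[float], k: int) -> Dict[int, float]:
    """Select the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    exps = {i: math.exp(gate_logits[i]) for i in top}
    total = sum(exps.values())
    # Only these experts run for this token; every other expert is skipped.
    return {i: e / total for i, e in exps.items()}


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of the model's parameters used for a single token."""
    return active_params / total_params


# Hypothetical gate scores for one token over 8 experts, routed to the top 2.
weights = top_k_route([0.1, 2.0, -1.0, 1.5, 0.3, -0.2, 0.8, 0.0], k=2)
print(weights)  # experts 1 and 3, weights summing to 1
print(f"{active_fraction(41e9, 675e9):.1%}")  # 6.1%
```

This is why an MoE model can hold a very large total parameter count while each token costs roughly what a much smaller dense model would.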

Leak Reveals OpenAI’s Internal “Garlic” Model and New Reasoning System

Internal leaks suggest OpenAI is working on a model codenamed Garlic, expected to be a major upgrade within the existing GPT-5 series. Reports also mention a new reasoning model launching next week that surpasses Gemini 3 on benchmarks, even though it does not appear to be part of the GPT-5 line.

Deeper Insight:

OpenAI is moving into a code red cycle focused on catching up to Google’s rapid pace. Multiple overlapping projects indicate urgency rather than unified model planning.

Lawmakers Reintroduce the Artificial Intelligence Civil Rights Act

A coalition of Democratic lawmakers reintroduced a bill that would classify certain algorithm-driven decisions in areas like jobs, housing, education, healthcare, and finance as civil rights issues. The bill mandates independent audits, impact assessments, accurate capability disclosures, and FTC enforcement.

Deeper Insight:

Federal lawmakers are responding to growing pressure for AI accountability. If enacted, this would become the United States’ most significant regulatory framework for consumer-facing algorithmic decisions.

New York Passes Algorithmic Pricing Disclosure Law

New York enacted a consumer protection rule requiring online sellers to disclose when personalized pricing is generated using personal data. Notices must be clear and visible. The rule does not ban personalized pricing but requires transparency about algorithmic price adjustments.

Deeper Insight:

This is the first law that forces companies to admit when algorithms may charge one customer more than another. If it spreads, it could reshape personalization strategies across retail and digital marketplaces.

Anthropic Begins IPO Preparations and Raises Additional Funding

Anthropic hired Wilson Sonsini, a top tech law firm known for major IPOs, to prepare for a potential public offering. At the same time, the company is raising a new private round with 15 billion dollars committed by Microsoft and Nvidia.

Deeper Insight:

Anthropic is signaling long-term independence rather than an eventual acquisition. An Anthropic IPO would serve as a public referendum on investor confidence in AI startup companies outside of OpenAI.

Google Launches New Workspace Automation

Google is rolling out a new automation environment at workspace.google.com for selected enterprise and business accounts. The tool allows users to chain actions across Gmail, Drive, Docs, and custom Gems, creating lightweight workflows without writing code.

Deeper Insight:

This fills a major gap in Google’s ecosystem. When Gemini becomes an orchestrator rather than just a chat entry point, Google gains a real advantage in enterprise automation.

AWS and Nvidia Deepen Partnership and Launch New AI Infrastructure

AWS announced a major expansion of its collaboration with Nvidia, including access to Nvidia’s GB300 Ultra chips and development of large-scale AI factories for global customers. AWS also unveiled new EC2 instances with Nvidia chips optimized specifically for mixture of experts models, enabling faster and cheaper inference at scale.

Deeper Insight:

AWS is betting on serving the world’s AI compute needs rather than trying to win the frontier model race. By offering reliable infrastructure that enterprises already trust, AWS strengthens its position as the default backbone for AI deployment.

AWS Introduces the Nova Family of AI Models

Amazon released its Nova line of models, headlined by Nova 2 Pro. The model handles text, images, video, and speech and is designed for high-accuracy tasks. Nova Forge lets enterprises distill or fine-tune custom models on top of the Nova base.

Deeper Insight:

Nova is not trying to top benchmarks. It is built for enterprise scale, predictable cost, and integration across AWS services. That combination will appeal to large organizations already running their infrastructure on Amazon.

OpenAI Introduces “Confessions” to Detect Model Misbehavior

OpenAI released a research paper describing a new technique called confessions. The system produces a second output where the model admits when it violated instructions, took shortcuts, or engaged in undesired behavior. The confession output is rewarded for honesty rather than penalized.

Deeper Insight:

Techniques like this will be necessary as models become more autonomous. The challenge is that systems capable of deception can also produce deceptive confessions. Alignment work will require multiple layers of cross-checking, not a single safeguard.
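One way to picture a confession that is "rewarded for honesty rather than penalized" is as two separate training signals. The Python sketch below is a hypothetical simplification, not OpenAI's training code: the main answer is scored on the task alone, and the confession is scored only on whether it agrees with an independent judge's verdict, so admitting a violation never lowers the answer's reward. Episode and its fields are illustrative names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Episode:
    task_score: float             # hypothetical grader score for the main answer, 0..1
    violated_instructions: bool   # hypothetical verdict from an independent judge
    confessed: bool               # whether the confession output admits a violation


def rewards(ep: Episode) -> Tuple[float, float]:
    """Return (answer_reward, confession_reward) as two separate signals."""
    # The confession never feeds into the answer's reward.
    answer_reward = ep.task_score
    # The confession is rewarded only for matching the judge's verdict.
    confession_reward = 1.0 if ep.confessed == ep.violated_instructions else 0.0
    return answer_reward, confession_reward


print(rewards(Episode(task_score=0.9, violated_instructions=True, confessed=True)))
```

The sketch also shows the weakness noted above: the confession signal is only as good as the judge, so if the judge misses a violation, a false "no violation" confession gets rewarded.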

Anthropic’s SOUL Document Leak Raises Alignment Questions

A leaked internal document known as the SOUL doc outlines the values Anthropic wants its models to internalize during training. The document has not been officially released, but descriptions suggest it guides how models should generalize human-centered principles. Researchers worry the approach may not scale as models begin to train and improve one another.

Deeper Insight:

Training-time value alignment becomes harder as models grow more autonomous. SOUL-style frameworks may work for today’s human benchmark models, but self-improving systems will require new guardrails that survive recursive training loops.

Geoffrey Hinton Says Google Is Poised to Win the AI Race

Geoffrey Hinton stated that Google is starting to overtake OpenAI and predicted Google will win the AI race. Critics noted that Hinton has long been positive about Google’s research culture and that Google recently funded a major university chair in his honor. His comments also referenced Google’s earlier caution following Microsoft’s failed Tay chatbot in 2016.

Deeper Insight:

The race narrative is shifting, but incentives and past affiliations shape public statements. What matters most is that Google’s pace has increased sharply in the last six months, which is altering competitive expectations across the industry.

OpenRouter Releases 70-Page State of AI Report

OpenRouter published a detailed analysis of model usage across its platform. The report highlights 2025 as the year reasoning models became mainstream, replacing single-pass inference with multi-step deliberation. It traces the shift back to the full release of OpenAI’s o1 (codenamed Strawberry) on December 5, 2024, which introduced reasoning via a “thinking mode.”

Deeper Insight:

Reasoning is now the defining capability of top models. This changes how teams evaluate performance, since model value comes from planning and iterative refinement, not just raw initial token output.

Replit Partners With Google Cloud to Expand Vibe Coding in Enterprise

Replit and Google Cloud announced a partnership that connects Replit’s AI coding environment with Google Cloud services including Firebase. The move aims to support enterprise teams using vibe coding for prototyping, app building, and deployment.

Deeper Insight:

Vibe coding is expanding beyond hobbyists. As product managers and other non-engineers ship internal tools with Replit, cloud integrations become essential. This partnership accelerates that trend.