The Daily AI Show: Issue #78

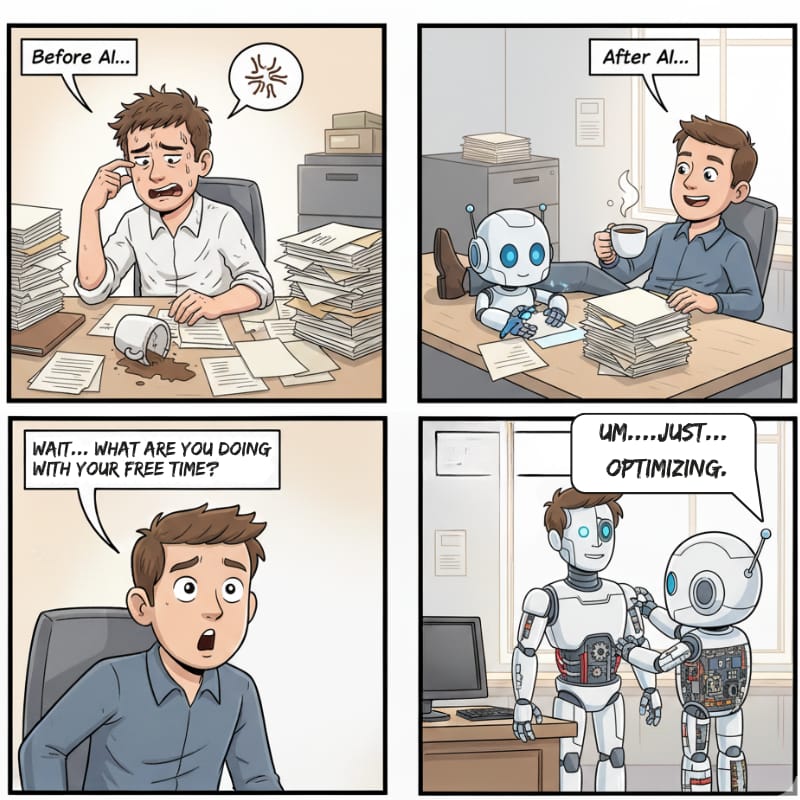

AI is just over here "optimizing" while we clap

Welcome to Issue #78

Coming Up:

Are Custom GPTs Heading Toward a Vibe Coding Future?

Digital Twins Need Strategy, Not Just Files

Codex vs Antigravity: Two Roads to Smarter AI Coding

Plus, what happens when our AI robots “optimize,” a big AI win in the fight against breast cancer, the invisible AI debt, and all the news we found interesting this week.

It’s Sunday morning.

We just hit 600 episodes of the Daily AI Show without taking a break.

And we have no intention of slowing down, because AI sure isn’t.

Wipe those weary eyes, it’s time to dig in.

The DAS Crew - Andy, Beth, Brian, Jyunmi, and Karl

Our Top AI Topics This Week

Are Custom GPTs Heading Toward a Vibe Coding Future?

The way people build with AI is changing fast. Custom GPTs were once seen as the easiest way to package instructions, tools, and knowledge into small, specialized assistants. But anyone who has built more than a few knows the pain points: platform updates break workflows, persistent memory overrides carefully designed logic, model changes shift output style, and every revision requires downloading, editing, and re-uploading rigid files.

The direction is becoming clearer.

These assistants are probably going to drift toward a future that looks a lot more like vibe coding, where developers work interactively with a model to update logic, files, and behaviors in real time. Instead of hand-editing static router docs and task files, builders will collaborate with a coding-capable model that can rewrite, reorganize, and redeploy the entire assistant on command.

Google is closest to this today with its canvas-based builder, cloud artifacts, and deep Workspace integration. You can already see the outline of a system where change requests are conversations, not file surgery. OpenAI will need to move in the same direction. Its GPT-5.1 Cookbook hints at it, but the current tooling forces builders to wrestle with hardcoded knowledge docs, inconsistent overrides, and unpredictable memory behavior.

None of that scales.

A vibe coding layer solves these problems by giving creators a live coding session with the model itself. In that world, you update logic across all your assistants at once. You patch a workflow without breaking someone’s stored preferences. You push new behaviors reliably because the structure behind them behaves like software, not a fragile super prompt.

The next generation of AI assistants will not be documents glued together with prompting tricks. They will be small programs, edited conversationally, deployed instantly, and updated the way developers update apps.

Digital Twins Need Strategy, Not Just Files

Interest in digital twins is surging again, driven partly by a wave of newcomers who discovered the concept at a recent Tony Robbins event. Many paid almost a thousand dollars for a seven-module course on building a personal clone. Now they are entering AI communities expecting practical results, only to realize the hard part is not the tooling but the strategy behind what the twin is supposed to do.

Digital twins fall into three clear categories.

The first is the internal “work twin,” a private assistant trained on your branding, writing style, offers, frameworks, and past work. These twins help with content creation, drafting responses, and keeping your voice consistent.

The second category is the sentimental or biographical twin. That one captures family stories, personal history, and reflections. It is powerful but time-consuming, and it usually has no business function.

The third category is the client-facing or public twin, which can act as a lead magnet, a buffer for pick-your-brain questions, or a lightweight revenue product. This is where adoption is growing fast.

The best example today comes from venture capitalist Katie Dunn. She built a twin that answers the ten questions she is asked most often about startups and fundraising. Users get three questions free, then they pay a small fee to continue. It saves her time, filters for serious people, and scales her expertise without diluting its quality. This is the kind of digital twin that works because it has a clear purpose, a clear boundary, and a clear value exchange.
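The "three free questions, then pay" boundary is simple to express in code. Here is a minimal sketch, assuming a per-user session object; the `TwinSession` class, the `FREE_QUESTIONS` constant, and the `answer_from_twin` placeholder are hypothetical names, not part of any real digital-twin product.

```python
from dataclasses import dataclass

FREE_QUESTIONS = 3  # assumption: mirrors the "three questions free" rule described above


def answer_from_twin(question: str) -> str:
    # Hypothetical placeholder: a real twin would call a model grounded in the creator's FAQ.
    return f"Twin answer to: {question}"


@dataclass
class TwinSession:
    """Hypothetical per-user session for a paywalled digital twin."""
    questions_asked: int = 0
    paid: bool = False

    def can_ask(self) -> bool:
        # Free users get FREE_QUESTIONS answers; paying users are not metered.
        return self.paid or self.questions_asked < FREE_QUESTIONS

    def ask(self, question: str) -> str:
        if not self.can_ask():
            return "PAYWALL: upgrade to keep asking."
        self.questions_asked += 1
        return answer_from_twin(question)


session = TwinSession()
for q in ["How big should a seed round be?", "When do I need a lead investor?",
          "What do VCs look for in a deck?", "How do I value my startup?"]:
    print(session.ask(q))  # the fourth question hits the paywall
```

One design choice worth noting: the meter lives outside the model, so the boundary holds even if the model ignores its instructions.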

The real problem is not building these systems.

Modern assistants can ingest brand docs, transcripts, and notes easily. The challenge is distribution. A digital twin with no audience is just a private notebook with a fancy wrapper. Anyone creating one needs a plan for traffic, onboarding, and positioning. Without that, even the most polished twin will sit unused.

Interest will keep rising as more creators and operators realize they are repeating the same answers week after week. A digital twin can solve that, but only if it is framed as a product, not a novelty.

How Gemini 3 Is Rewriting the Rules of Prompting

For years, prompting meant long instructions, rigid steps, and careful routing across multiple files. Gemini 3 flips that model. It wants objective-based prompts, not procedural scripts. It can work across huge context windows, reason across an entire build, and adjust its own approach as long as the user gives clear intent and strong constraints.

This shift is already showing up in how creators are building Gems. Instead of old-style task lists, Gemini 3 prefers a single unified prompt that sets guardrails, defines the main objective, outlines negative constraints, and lets the model run. It handles the reasoning, sequencing, and self-checking internally. If you try to force the old workflow on it, the model pushes back and suggests a simpler, stronger structure.

Google also shared the core components they believe every Gem prompt should include. These elements allow the model to sustain long chains of reasoning without breaking, avoid unwanted behavior, and maintain quality from start to finish.

Key components Gemini recommends for Gem prompts

Prime Directive

A single, overriding goal that anchors the entire system. This removes ambiguity and keeps the model aligned through long conversations.

Phases Instead of Steps

Steps break easily because they require perfect sequencing. Phases describe a state, and the model stays in that state until exit criteria are met. This creates stability.

Deep Reasoning Loop

A built-in self-check where the model verifies whether the output is specific, aligned with the knowledge files, correct in tone, and free of generic filler. If any test fails, it must revise.

Source of Truth Hierarchy

Explicit rules for resolving conflicting information. For example, “internal documents outrank user memory,” or “the website outranks older notes.”

Negative Constraints

Hard “never” rules: never use certain words, never output tables, never assume the user understands acronyms, and so on. These rules are more reliable than positive phrasing.

Quality Gate

A requirement that the model ask for permission before advancing phases. This prevents premature conclusions and reduces hallucinations.
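Google describes these components in prose, not code. Here is a minimal sketch, assuming you want to assemble them into one unified prompt string; the `Phase` class, the `build_gem_prompt` function, the section headings, and the example content are hypothetical illustrations, not Google's official Gem template.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Phase:
    name: str
    goal: str
    exit_criteria: str  # the model stays in this phase until this condition is met


def build_gem_prompt(prime_directive: str, phases: List[Phase], self_checks: List[str],
                     source_hierarchy: List[str], never_rules: List[str]) -> str:
    """Assemble one unified, objective-based prompt from the six components."""
    lines = ["# PRIME DIRECTIVE", prime_directive, ""]

    lines.append("# PHASES (remain in a phase until its exit criteria are met)")
    for i, p in enumerate(phases, start=1):
        lines.append(f"Phase {i} - {p.name}: {p.goal} Exit when: {p.exit_criteria}")
    lines.append("")

    lines.append("# DEEP REASONING LOOP (run on every draft; revise if any check fails)")
    lines += [f"- {check}" for check in self_checks]
    lines.append("")

    lines.append("# SOURCE OF TRUTH HIERARCHY (higher entries win conflicts)")
    lines += [f"{rank}. {src}" for rank, src in enumerate(source_hierarchy, start=1)]
    lines.append("")

    lines.append("# NEGATIVE CONSTRAINTS")
    lines += [f"- NEVER {rule}" for rule in never_rules]
    lines.append("")

    lines.append("# QUALITY GATE")
    lines.append("Before leaving any phase, summarize the result and ask the user for permission to continue.")
    return "\n".join(lines)


prompt = build_gem_prompt(
    prime_directive="Turn the user's raw notes into a publish-ready newsletter section.",
    phases=[
        Phase("Intake", "Collect topics, audience, and tone.", "The user confirms the topic list."),
        Phase("Draft", "Write each section.", "Every section passes the reasoning loop."),
    ],
    self_checks=["Is it specific?", "Does it match the knowledge files?",
                 "Is the tone right?", "Is it free of generic filler?"],
    source_hierarchy=["Internal documents", "The website", "Older notes", "User memory"],
    never_rules=["output tables", "assume the user understands an acronym"],
)
print(prompt)
```

Keeping each component in its own labeled section makes it easy to update one rule (say, a new "never" constraint) across many Gems without rewriting the whole prompt.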

Gemini 3 works best when it is treated as a partner, not a machine that follows rigid scripts. It thrives with clear goals, strong guardrails, and room to think. The era of linear task prompting is fading. Objective-driven prompting is the new standard.

Codex vs Antigravity: Two Roads to Smarter AI Coding

OpenAI’s Codex Max and Google’s Antigravity represent a split in how future coding assistants will handle reasoning, memory, and autonomy.

OpenAI’s Codex Max introduces a technique called context compaction. Instead of holding an enormous context window in active memory the way Gemini does, Codex compresses the most relevant pieces of prior work and passes them forward. Each new context window receives a distilled summary of everything that mattered in the last one, allowing the model to work for up to 24 hours across massive codebases without losing focus. It’s a clever way to simulate long-term memory without the cost of storing it.
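OpenAI has not published how Codex Max compacts its context, so the sketch below shows only the general rolling-summary idea, under stated assumptions: tokens are approximated by whitespace-separated words, and `naive_summarize` is a hypothetical stand-in for the model summarizing its own history. The `compact` function and its `budget` and `keep_recent` parameters are illustrative names, not an OpenAI API.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    # Crude stand-in (assumption): one token per whitespace-separated word.
    return len(text.split())


def naive_summarize(messages: List[str], max_words: int = 20) -> str:
    # Hypothetical placeholder: a real system would ask the model to distill
    # decisions, open TODOs, and file changes instead of truncating.
    words = " ".join(messages).split()
    return "SUMMARY: " + " ".join(words[:max_words])


def compact(history: List[str], budget: int, keep_recent: int = 2,
            summarize: Callable[[List[str]], str] = naive_summarize) -> List[str]:
    """Fold older messages into one summary when the history exceeds the token budget."""
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # still fits: nothing to compact
    older, recent = history[:-keep_recent], history[-keep_recent:]
    # The summary replaces the older messages; the most recent turns pass through verbatim.
    return [summarize(older)] + recent


history = [f"step {i}: edited module_{i}.py and ran the tests" for i in range(12)]
history = compact(history, budget=40)
print(history[0])  # a single SUMMARY line now stands in for the older steps
```

In an agent loop, you would call `compact` after every turn, so each new window starts from the previous summary plus the latest work rather than the full transcript.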

Google’s Antigravity, built by the team behind Windsurf, takes the opposite approach. It leans on raw context and architecture design, letting Gemini 3 sustain larger windows and write background code to solve multi-step problems. In tests, it quietly created and executed hidden Python scripts to manage Google Sheets and validate hundreds of data points, showing it can build, test, and deploy mini tools in real time. That invisible reasoning layer marks a shift toward autonomous coding, where models don’t just assist developers but act as full collaborators.

Early results suggest Codex Max has an edge in backend logic and structured programming, while Antigravity shines in user interface design and multimodal integration. Developers are already mixing the two, using Gemini for design and Codex for logic, to produce complex apps faster than ever before.

Both paths point to a future where coding is less about syntax and more about orchestration. Codex is perfecting memory efficiency, while Antigravity is testing how far reasoning can stretch. Together, they’re redefining what it means to “build” with AI.

Just Jokes

AI For Good

A new study published in Nature Health showed that an AI-supported breast-cancer screening workflow increased cancer detection rates by more than twenty percent across more than half a million women in community clinics. The workflow improved accuracy without increasing unnecessary callbacks, which means fewer patients go through stressful repeat screenings.

Because the system catches more cancers earlier, patients are less likely to need expensive late-stage treatments, and they avoid many of the extra imaging appointments that drive up personal medical costs.

The study also found the benefits were consistent across diverse patient groups, including women with dense breast tissue, which gives clinics a reliable way to improve outcomes without raising the cost of care.

This Week’s Conundrum

A difficult problem or question that doesn't have a clear or easy solution.

The Invisible AI Debt Conundrum

Most creative work in the future will still have clear owners. Novels will still have authors. Films will still credit directors. Inventions will still file patents. But beneath all of that, AI models will quietly borrow from sources no one ever meant to share. A breakthrough insight might rely on the phrasing of a stranger’s blog post. A melody might carry the echo of a musician who never earned a cent. A business idea might be guided by patterns learned from millions of people who never knew they were part of the training.

We already see hints of this today. People enjoy the speed, precision, and intelligence of modern AI systems, even when it is obvious that the work was shaped by countless unseen contributors. Society has a long history of accepting benefits without looking too closely at what it costs others. The saying about not wanting to know how the sausage is made has never felt more relevant.

AI pushes that dilemma forward. Should society confront the uncomfortable truth that some contributions will never be credited or compensated, even when they shaped something meaningful? Or will people decide that the benefits are too important and quietly ignore who got overlooked along the way?

The conundrum:

As AI creates value built on invisible contributions, do we force society to face every hidden debt even when it slows progress and complicates innovation, or do we accept the comfort of not knowing in exchange for tools that make life better, faster, and easier for everyone else?

Want to go deeper on this conundrum?

Listen to our AI-hosted episode

Did You Miss A Show Last Week?

Catch the full live episodes on YouTube or take us with you in podcast form on Apple Podcasts or Spotify.

News That Caught Our Eye

Gemini 3.0 Takes the Top Spot

Gemini 3.0 arrived with a surprising haul of wins in the benchmarking wars, surpassing OpenAI’s GPT-5.1 in multimodal reasoning and coding, and it connects deeply into Google’s productivity ecosystem. Notably, it jumped well ahead of the field on the ARC-AGI-2 leaderboard, scoring 2.5X what GPT-5 Pro achieved!

Deeper Insight:

This shift could redefine competition in AI. Google’s strength lies not only in model capability but in ecosystem depth: Gemini’s reach extends into Docs, Sheets, Drive, and Android. For many users, competitive performance plus seamless integration could outweigh pure model benchmarks.

Microsoft Launches Superintelligence Lab Led by Mustafa Suleyman

Microsoft announced a new research division focused on artificial superintelligence, led by Mustafa Suleyman, co-founder of DeepMind and Inflection AI. In recent interviews, Suleyman described the lab’s mission as developing “humanist AI,” meaning systems aligned with human ethics, safety, and employment stability.

Deeper Insight:

Suleyman’s framing contrasts sharply with Elon Musk’s automation-first vision. By leading with “humanist” language, Microsoft is positioning itself as the pragmatic counterweight to accelerationism, courting regulators and enterprise clients alike.

Japanese AI Lab Sakana Becomes Country’s Most Valuable Private Company

Tokyo-based research lab Sakana AI became Japan’s highest-valued private company following a major funding round. The lab gained prominence through its evolutionary “model merging” techniques, which combine smaller specialized models into more capable systems at low cost.
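Sakana’s published merging work evolves merge recipes for real neural networks; the toy below only illustrates the core idea on tiny hand-made weight vectors. It is a minimal sketch, assuming two "models" represented as lists of floats, a per-parameter merge coefficient, and a simple (1+1) evolution strategy in place of the more sophisticated search Sakana uses. The `merge` and `evolve_merge` functions and the fitness function are hypothetical illustrations.

```python
import random
from typing import Callable, List, Tuple

Weights = List[float]


def merge(a: Weights, b: Weights, alphas: List[float]) -> Weights:
    # Per-parameter interpolation: alpha = 1 keeps model A's value, alpha = 0 keeps model B's.
    return [al * x + (1 - al) * y for x, y, al in zip(a, b, alphas)]


def evolve_merge(a: Weights, b: Weights, fitness: Callable[[Weights], float],
                 generations: int = 200, sigma: float = 0.1,
                 seed: int = 0) -> Tuple[List[float], float]:
    """(1+1) evolution strategy over merge coefficients: mutate, keep the child if no worse."""
    rng = random.Random(seed)
    best = [0.5] * len(a)  # start from a plain 50/50 weight average
    best_score = fitness(merge(a, b, best))
    for _ in range(generations):
        # Gaussian mutation, clipped so every coefficient stays in [0, 1].
        child = [min(1.0, max(0.0, al + rng.gauss(0, sigma))) for al in best]
        score = fitness(merge(a, b, child))
        if score >= best_score:
            best, best_score = child, score
    return best, best_score


# Toy usage: model A is "good" at parameter 0, model B at parameter 1.
model_a = [1.0, 0.0]
model_b = [0.0, 1.0]
target = [1.0, 1.0]
fitness = lambda w: -sum((x - t) ** 2 for x, t in zip(w, target))  # higher is better
alphas, score = evolve_merge(model_a, model_b, fitness)
print(alphas, score)  # alphas drift toward [1, 0], taking the stronger parameter from each model
```

The appeal is cost: the search only evaluates merged candidates and never retrains either model, which is why merging can be far cheaper than brute-force scaling.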

Deeper Insight:

Sakana’s ascent shows the global diversification of AI leadership. By focusing on creative model architectures rather than brute-force scaling, Japan is carving a niche in efficient, sustainable AI innovation.

OpenAI’s Cookbook Update Spurs Developer Adjustments

OpenAI released a new Cookbook for GPT-5.1, guiding developers on best practices for prompt design and system behavior. The changes are particularly relevant for agencies maintaining Custom GPTs.

Deeper Insight:

OpenAI’s ecosystem is beginning to mirror enterprise software: complex, versioned, and dependent on managed updates. As AI systems evolve toward persistent memory and embedded workflows, developers will need scalable methods to maintain hundreds of customized builds that can keep up with each new model’s quirks.

Cloudflare Outage Disrupts Major Online Services

Cloudflare experienced a significant outage that affected large parts of the internet. Numerous platforms reported service failures or degraded performance as Cloudflare’s network issues rippled across authentication, hosting, and content delivery layers. The disruption blocked users, developers, and companies from accessing core services for several hours.

Deeper Insight:

The outage is a reminder of how concentrated internet infrastructure has become. When a single provider goes down, the effects spread instantly. Redundancy planning is no longer optional for businesses that depend on uninterrupted digital operations.

Jeff Bezos Takes Co-CEO Role at Prometheus, a New Physical AI Lab

Jeff Bezos stepped into a co-CEO role at Prometheus, an AI lab focused on physical intelligence. The company has already hired more than one hundred researchers from leading AI teams and is building systems that understand physics, space, and real-world dynamics. Bezos co-leads Prometheus with physicist Vik Bajaj, who previously worked at Google X.

Deeper Insight:

Prometheus signals a shift from language models to physical world models. These systems could reshape robotics, logistics, aerospace, and any industry that requires spatial reasoning. Amazon and Blue Origin stand to benefit from these advances directly.

Nvidia Releases Apollo Models for Industrial Physics Simulation

Nvidia launched Apollo, a family of open models designed for engineering tasks such as structural mechanics, electromagnetics, semiconductor design, and computational fluid dynamics. Several global companies are already using Apollo for next-generation hardware development.

Deeper Insight:

Apollo strengthens Nvidia’s position beyond GPUs. By offering specialized models for scientific and industrial work, Nvidia is moving deeper into the core workflows that drive chip design and advanced manufacturing.

Grok 4.1 Climbs to the Top of LMArena Rankings

Grok 4.1 briefly took the number one spot on LMArena’s human-preference leaderboard for frontier models. The model reduced its hallucination rate, showed stronger emotional intelligence, and delivered better creative writing. Its reign was short-lived, but it marked impressive gains over prior xAI releases. As of this writing, Gemini 3.0 holds a solid lead on LMArena.

Deeper Insight:

Grok’s performance signals that xAI is no longer far behind the larger labs. Gains in emotional intelligence also matter, since natural conversation and tone matching remain hard for most models.

ElevenLabs Adds Video and Image Generation Tools

ElevenLabs launched a new interface that lets users create videos and images using external models, then layer ElevenLabs voice, music, and sound tools on top. The system functions as an all-in-one wrapper that combines video generation, audio production, and lip syncing.

Deeper Insight:

This is a strategic move. ElevenLabs is not trying to compete with video model developers. Instead it is positioning itself as the default pipeline for voice, emotion, and sound. Video is the hook, voice is the product.

Google Expands AI Shopping, Travel Planning, and Agentic Booking

Google introduced new AI-powered planning tools for travel and shopping. Users can build itineraries inside a search canvas, track flight deals, book through an agent, and have AI place voice calls to stores to check product availability. Holiday shopping features now include price tracking and AI-driven recommendation flows.

Deeper Insight:

Google is weaving lightweight agentic behavior into everyday tasks. By embedding AI into search, Maps, and Pay, the company is positioning itself to become the default assistant for real world planning.

Nvidia Q3 Earnings Were Set to Move the Market…

Nvidia reported third-quarter earnings after the market closed Wednesday. Analysts expected the results to either confirm fears of a broader tech slowdown or trigger a rally on the strength of the five hundred billion dollars in future chip demand Nvidia has reported through 2026. The report was excellent, and NVDA rose significantly in overnight and pre-market trading. By Friday, though, the rally had reversed: fears that unstable AI industry dynamics could disrupt the broader economy pulled technology stocks down, NVDA included.

Deeper Insight:

Nvidia has become the market’s pressure point. Its earnings no longer reflect only chip sales; they act as an economic signal for the entire AI supply chain.

Microsoft and Nvidia Invest Fifteen Billion Dollars in Anthropic

Microsoft and Nvidia announced a combined investment of up to fifteen billion dollars in Anthropic, in a deal reported to value the company at around three hundred fifty billion dollars. As part of the deal, Anthropic committed to purchasing thirty billion dollars of Azure compute capacity, and Nvidia will work with Anthropic on chip development tailored to Claude. The move extends Claude’s availability to Microsoft’s cloud alongside Amazon’s.

Deeper Insight:

Anthropic is being positioned as the enterprise friendly counterweight to OpenAI. Dual cloud partnerships give Claude broad reach and reduce dependence on any single ecosystem.

Microsoft Debuts a Twenty Four Seven Sales Development Agent

Microsoft introduced a new autonomous sales development agent designed to perform prospecting, lead generation, and outreach at all hours. Early access descriptions suggest deep integration with Microsoft 365 applications, along with a central command center to monitor agent workflows and flag unapproved or outdated internal agents running inside an organization.

Deeper Insight:

Large enterprises need governance as much as automation. Microsoft is moving early to solve the coming chaos of untracked internal agents before it becomes a security and compliance problem.

Microsoft Expands Agentic Tools for Word, Excel, and PowerPoint

Microsoft previewed new AI agents capable of generating and modifying Word documents, Excel models, and PowerPoint decks. Many features are still in early access or beta, but the initiative points toward a future where Copilot can perform full document production rather than isolated suggestions.

Deeper Insight:

Office documents are the backbone of corporate communication. Full stack agentic creation across Word, Excel, and PowerPoint could change knowledge work at its foundation.

Alibaba Launches “Qwen,” a Consumer AI Assistant

Alibaba released the Qwen app, a consumer-focused AI chatbot built on its latest Qwen model and offered as a free mobile and web assistant. Qwen can generate research reports, rewrite documents, and build slide decks from simple commands. It replaces the company’s earlier Tongyi app, which struggled to gain traction in a highly competitive Chinese chatbot market.

Deeper Insight:

China’s AI space is locked in a price war. Alibaba’s shift toward a consumer assistant integrated with its shopping and browser ecosystem mirrors Western efforts to build daily use AI habits.

Poe Adds Group Chat With Up to 200 Participants

Poe introduced a new feature allowing group chats with up to two hundred people interacting with the same AI model. The tool blends AI assistance with large scale synchronous communication, creating a shared environment where teams or communities can collaborate inside a single AI session.

Deeper Insight:

Large-scale, AI-mediated group chat hints at new formats for meetings, classes, and live events. The challenge will be managing the signal-to-noise ratio as group size grows.

Replit Introduces “Design,” a New AI UI Builder

Replit launched Design, a visual UI creation tool that lets users generate polished interfaces using natural language. The feature blends concepts from Canva with Replit’s existing coding environment to give developers a fast way to build production-ready front ends.

Deeper Insight:

Vibe coding is expanding beyond code generation. Tools that design entire interfaces compress development time even further and push Replit deeper into full-stack app creation.

Prime Video Adds AI Recap Feature for Series Catch-Up

Prime Video introduced an AI-driven recap tool that summarizes past seasons of shows to help viewers refresh before new episodes or seasons drop. The system can generate personalized recaps based on viewing history and focuses on key character arcs and plot developments. It builds on Prime’s previous AI use in its “X-Ray” feature, which displays cast and scene details during playback.

Deeper Insight:

This is a practical example of AI enhancing convenience without disrupting entertainment. By quietly embedding AI in small user experiences, Amazon is normalizing AI assistance in everyday media consumption.

Perplexity Revamps Shopping Experience Ahead of Black Friday

Perplexity rolled out an improved AI shopping experience optimized for product discovery and purchase recommendations. The update includes better price comparison, review summarization, and checkout integration, aiming to turn the app into a full product research and buying assistant during the holiday season.

Deeper Insight:

AI shopping tools are evolving from chat to commerce. For big-ticket items like appliances or electronics, systems like Perplexity could soon rival Google Shopping by blending reasoning, recommendation, and transaction in one flow.

Fidji Simo Named CEO of Applications at OpenAI

OpenAI has revealed that Fidji Simo, former CEO of Instacart and former head of the Facebook app at Meta, is now leading OpenAI’s Applications division. She will oversee all consumer-facing products, including ChatGPT, voice, and shopping experiences. Simo described her mission as “closing the gap between the intelligence of our models and how people use them.”

Deeper Insight:

Simo’s arrival marks OpenAI’s push toward monetization and usability for consumers. With her background scaling consumer platforms, OpenAI is signaling that its next frontier is less about research and more about building everyday products that generate revenue.

AlphaXiv Raises $7 Million to Build AI-Only Research Hub

A new startup, alphaXiv, raised $7 million to create a dedicated repository for AI research papers. The platform will allow engineers to publish and discuss emerging methods like compaction and reasoning chains, combining open-access publishing with AI-assisted summarization and analysis.

Deeper Insight:

AlphaXiv aims to modernize how researchers share AI breakthroughs. If it succeeds, it could become the go-to source for engineers looking to collaborate without the noise and delay of traditional academic review cycles.

Suno and Udio Secure Major Funding and Music Licensing Deals

Music-generation platform Suno raised $250 million at a $2.5 billion valuation. Meanwhile, Udio reached licensing agreements with Warner Music Group and Universal Music Group, resolving prior legal disputes over copyrighted material used in training.

Deeper Insight:

The AI music industry is moving toward legitimacy. By securing licenses, companies like Udio are turning conflict into collaboration. This could be a sign that the creative industries are adapting to AI rather than resisting it.