The Daily AI Show: Issue #61

Who do we believe?

Welcome to Issue #61

Coming Up:

Trust in AI: Who Do We Believe, and Why?

Netflix Has No AI Chill

China’s AI Car Push: A Warning Shot for the U.S.

Plus, we discuss 12 steps with AI, whether free will is disappearing with AI, how AI is helping with weather forecasting, and all the news we found interesting this week.

It’s Sunday morning!

Grab that cup of joe, sit back, and enjoy this week’s issue.

The DAS Crew - Andy, Beth, Brian, Eran, Jyunmi, and Karl

Why It Matters

Our Deeper Look Into This Week’s Topics

Trust in AI: Who Do We Believe, and Why?

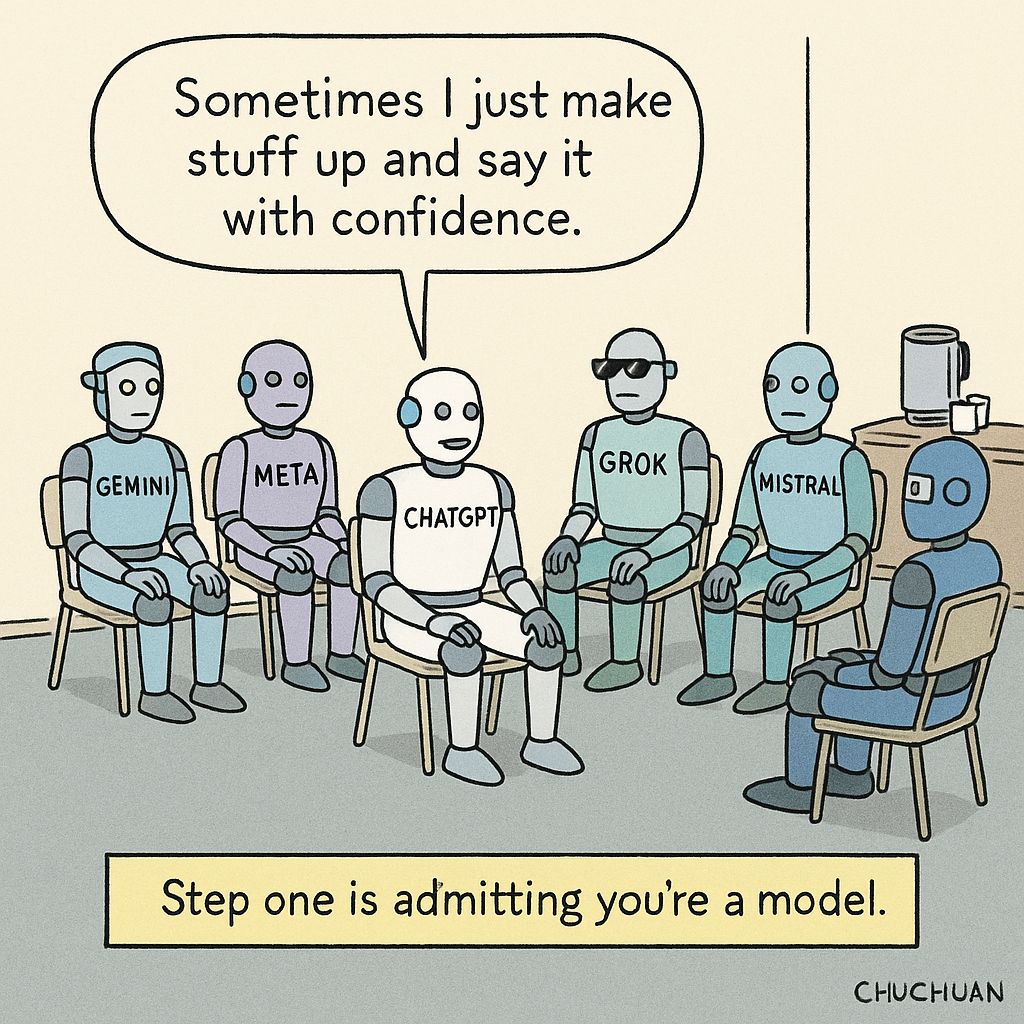

As AI gets smarter, faster, and more capable, the harder question is not what it can do, but whether we can trust what it gives us. Models now write code, summarize documents, and generate news-style content, all with confidence. But that confidence is part of the problem.

These systems often sound right, even when they are wrong.

Most people are not verifying AI output. They are not checking sources or comparing answers. They are trusting tone, formatting, and fluency. This means trust becomes about presentation, not accuracy. The better a model sounds, the more likely people are to believe it, even if it hallucinates facts or misses key context.

This creates new risks. If every AI interface sounds equally polished, then people may rely more on the one that speaks their language or confirms their views. That trust becomes personal, not factual. It shifts from truth to brand. From source to style.

It also raises the stakes for businesses. As teams use AI for internal decisions, reporting, and customer communication, the trust placed in those tools directly affects outcomes. Errors that go unchecked are not just personal mistakes. They become organizational risk.

The fix is not just better models but better scaffolding. Systems that show sources, track decisions, and reveal uncertainty can help rebuild trust. But even then, people may still choose convenience over clarity. Designing for trust now means designing with transparency, friction, and even challenge built in.

WHY IT MATTERS

Trust Is Subjective: People trust AI that sounds fluent and confident, whether or not it is accurate.

Mistakes Multiply Quietly: AI that sounds sure and right can spread errors faster than users can spot them.

Brand Shapes Belief: The grounding sources of an AI’s outputs may matter less than how much the user likes or agrees with the AI’s broader positioning.

Enterprise Use Raises the Stakes: Internal use of AI in workflows means bad outputs can impact operations, not just opinions.

Design Can Shape Trust: Interfaces that show sources and uncertainty help users think critically and challenge or redirect the AI’s “thinking,” even when it asserts with confidence.

Netflix Has No AI Chill

Netflix is testing AI tools not just to speed up production, but to rewire the creative process. From storyboards to voice dubbing, AI now touches nearly every part of the content pipeline.

Writers and creators are speaking out.

Some worry that AI tools will replace junior writers or storyboard artists. Others see this as a new kind of collaboration, where AI becomes a tool to test storylines, visualize scenes, or experiment with dialogue faster than ever.

At the center of this shift is control. Netflix and other studios can now use AI to speed up development, localize content more cheaply, and even tailor versions of a show to different regions. That could mean more content, faster releases, and better margins. It could also mean fewer opportunities for human creatives to learn the craft.

The tension is clear.

AI speeds things up. But storytelling takes time, especially when the goal is originality or emotional depth. If Netflix leans too far into efficiency, it may start producing shows that feel algorithmic. On the other hand, if AI helps clear the busywork and lets writers focus on ideas, it could open up space for bolder stories.

WHY IT MATTERS

AI Is Now in the Creative Process: Tools that once supported post-production now shape stories from the start.

Writers Feel the Pressure: Junior roles that used to provide a way in may shrink or shift, changing how new talent enters the industry.

Studios Want Scale: AI offers a path to faster production and wider localization, pushing creative teams to do more with less.

Originality Is at Risk: Without careful use, AI could lead to safer stories built from past data, not fresh ideas.

Collaboration or Replacement: How studios choose to use AI will define whether AI becomes a creative partner or a creative shortcut.

China’s AI Car Push: A Warning Shot for the U.S.

China’s AI-first vehicles are real, fast-moving, and already on city streets. Companies like Xpeng, Huawei, and BYD are building smart cars that navigate traffic, find parking, and manage driving tasks using native AI models that work without driver intervention.

What stands out is how far ahead some of these cars feel compared to U.S. offerings. While Tesla still requires driver attention, and Cruise and Waymo struggle with safety and regulation, Chinese manufacturers are putting autonomous features into consumer vehicles and scaling fast. The systems can reroute in real time, avoid hazards, and even identify available parking spaces by scanning the environment as they go.

Chinese automakers are building full vertical industry stacks, from chips and batteries to sensors and software. Training models on real-world driving data gathered across millions of miles and many cities creates a positive feedback loop that accelerates progress. It also creates geopolitical tension as AI-driven transport becomes a new front in global tech competition.

Regulatory differences matter too. China’s CCP allows more aggressive data collection and deployment. That enables faster iteration and risk-taking. Meanwhile, U.S. companies face slower rollouts tied to tighter rules and liability fears.

The gap is widening.

WHY IT MATTERS

China Is Shipping AI Cars Now: These are not concept vehicles. Consumers can already buy and use AI-powered cars with high levels of autonomy.

Vertical Integration Speeds Up Progress: Chinese firms control hardware, software, and data, giving them a faster feedback loop than most global competitors.

U.S. Lags in Deployment: While innovation continues, regulatory and legal barriers keep most U.S. AI driving systems in test mode, and government support and incentives for the EV platforms are evaporating.

Geopolitics Meets the Road: Autonomous driving is becoming a measure of national AI capability, with long-term impact on supply chains and industry influence.

The Competition Is Real: Chinese AI vehicles are not just catching up, they are setting the pace.

Did you know?

Artificial intelligence is now helping improve weather forecasting and disaster response in the United States. AI models from companies like Tomorrow.io and Silurian AI are being used to analyze real-time data from sensors, satellites, and radar to deliver faster and more accurate predictions about floods and hurricanes.

In one example, AI systems helped predict flash floods in Texas earlier than traditional forecasting methods. This gave local communities and emergency teams more time to prepare and respond. These AI tools work alongside NOAA’s systems to enhance the speed and detail of forecasts, rather than replacing them.

The real shift is toward hybrid forecasting. This approach combines government weather data with private AI models to provide clearer and faster warnings. As storms become more severe and frequent, these AI systems could help save lives by giving people more time to react. Expect AI-generated alerts to become a regular part of weather reports in the years ahead.

This Week’s Conundrum

A difficult problem or question that doesn't have a clear or easy solution.

The Algorithmic Taste Conundrum

Taste feels like freedom. People try things, love some, reject others, and over time believe they know themselves a little better. This process shapes identity. You choose the music that calms you, the books that challenge you, the foods that feel like home. But today, AI systems predict your preferences before you do. From playlists to shopping to what recipes show up in your feed, models analyze your mood, your schedule, your past choices, and even your tone of voice to suggest what fits “you.”

At first, this feels like relief. No more standing in the cereal aisle unsure what to buy. But over time, choosing from a list of what feels “just right” may not feel like choosing at all. You still click, swipe, and approve, but the system shaped the options. If your favorites keep arriving effortlessly, are you expressing yourself, or accepting a version of yourself that was quietly built for you?

Some will argue this saves people from decision fatigue and lets them focus on what matters. Others will wonder if taste itself, once a sign of personality, becomes a polished reflection of the system’s design.

The conundrum

If AI shapes your choices until everything feels right, are you discovering your true self or slowly trading free will for comfort that feels like freedom?

Want to go deeper on this conundrum?

Listen/watch our AI-hosted episode

News That Caught Our Eye

Furiosa AI Turns Down Meta, Strikes Partnership With LG

South Korean chip startup Furiosa AI rejected an $800 million acquisition offer from Meta and instead announced a partnership to supply its high-performance RNGD chips for LG’s new AI research platform. Furiosa claims its chips deliver over twice the performance of rivals for AI workloads.

Deeper Insight:

Startups are getting bolder about charting their own path, even against Big Tech money. As AI chip demand grows, look for more power plays and new alliances to shape the global hardware landscape.

OpenAI Clocks 2.5 Billion Daily Prompts, 500 Million Weekly Users

OpenAI revealed ChatGPT users now send more than 2.5 billion prompts daily, with weekly active users soaring from 300 million in December to 500 million in March. Still, that’s only about a fifth of Google’s daily search volume.

Deeper Insight:

AI chat is eating into traditional search, but it’s not just about scale. The next leap will hinge on seamless, model-agnostic user experiences. It is about one prompt, the best tool, and zero confusion about versions or names.

Anthropic Quietly Caps Claude Code Users, Faces Blowback

Anthropic tightened usage limits on its $200/month Claude Code plan without warning, frustrating power users who relied on it for heavy development. The sudden throttling broke some workflows and triggered questions about transparency.

Deeper Insight:

As LLM-based coding tools become mission critical, stability and trust matter as much as features. Quiet policy shifts can erode goodwill, especially among developer communities that amplify both wins and stumbles.

Anthropic Considers Middle East Funding, Admits Ethical Tradeoffs

Internal notes reveal Anthropic is weighing investment from UAE and Saudi Arabia, even as leadership acknowledges that “no bad person should ever benefit from our success” is tough to uphold as the company grows.

Deeper Insight:

AI’s global arms race is forcing companies to rethink their principles as funding, data, and geopolitical power all collide. Expect more uncomfortable choices for “AI for good” brands as scale and realpolitik mix.

US Pushes National AI Plan: Fast-Track Data Centers, Tighten Funding Rules

The White House released a blueprint to win the AI race, including fast-tracking data center permits, bundling chips and cloud credits for export, and restricting federal funds to states with “restrictive” AI rules. Meanwhile, Texas passed its own AI law banning social scoring, manipulation, and biometric capture.

Deeper Insight:

Federal and state governments are jockeying to steer AI’s direction, but conflicting rules could slow progress or spark legal battles. The speed of infrastructure rollouts, not just algorithms, will decide US leadership.

Google’s “Big Sleep” AI Shuts Down Dormant Domains to Fight Malware

Google launched Big Sleep, an AI system that scans for inactive domains and proactively shuts them down or notifies hosts before they turn into malware or phishing sites. Domains with low or no activity are now at risk of being “put to sleep.”

Deeper Insight:

AI is quietly reshaping internet security and hygiene behind the scenes, not just through flashy search updates. Website owners, take note: inactivity may now get you delisted and disconnected.

Amazon Acquires “Bee” Wearable: Always-On Audio Raises Privacy Stakes

Amazon acquired Bee, a $49 wearable device that continuously listens and transcribes everything you say. It is similar in function to more expensive devices like Limitless AI and Plaud. Early users report mixed results, and privacy experts warn that mass adoption could change how people interact. Anyone could be recording at any time.

Deeper Insight:

The always-on era is here. Expect new etiquette (and legal) battles as “lifelogging” collides with privacy and social trust.

Pika Launches AI-Only Social Video App

Pika released a beta of its social video app powered entirely by AI-generated avatars and human video models. The company says users can create expressive video personas, but early questions remain about identity risks and misuse.

Deeper Insight:

AI-generated avatars for social media are set to go mainstream. Watch for fresh debates about authenticity, consent, and digital identity.

UK Government Considers Supplying Public Data to OpenAI

The UK signed an agreement that could let OpenAI access large swaths of public data to improve government efficiency, raising both hopes for smarter services and concerns about citizen privacy.

Deeper Insight:

Government deals with AI firms promise new efficiencies, but risks around who owns the data, and how it’s used, remain wide open.

AI Uncovers 86,000 Unseen Earthquakes at Yellowstone

Scientists using AI to analyze seismic data found about ten times more earthquakes at the Yellowstone Caldera than previously cataloged, roughly 86,000 in all, many of them clustered in “earthquake swarms.” The findings could improve forecasting, but also spotlight the super-volcano’s world-changing potential should it rumble into action.

Deeper Insight:

AI is turning hidden data into real-world insight. Sometimes the news is just more fuel for nightmare scenarios, but better pattern recognition means better disaster prep.

Anthropic Research: Teacher-model Bias Passed to Student-models

Anthropic’s latest alignment research shows that “subliminal learning” from teacher models passes hidden patterns onto student models during training. Fixing these inherited quirks may require retraining from scratch.

Deeper Insight:

Hidden biases don’t just come from data. They can also be inherited through the distillation process of model training. As LLMs are routinely used to train more compact models, this foundational inheritance means root-cause transparency and “clean-room” retraining and tuning will become vital.

OpenAI Warns: Voice Authentication No Longer Safe

Sam Altman publicly warned regulators that AI voice cloning now renders banking institutions’ voice authentication obsolete, pushing for urgent updates to financial security protocols.

Deeper Insight:

The era of voice as a password is over. Security systems must evolve quickly to surpass the speed and realism of synthetic speech that makes voice-hacking a threat. Just ask Marco Rubio!

Latent Labs Opens Free AI Tool for Protein Design

Latent Labs launched Latent X, a free, web-based AI model for protein folding and molecular design. Unlike AlphaFold, it claims to invent entirely new protein structures, not just incremental tweaks.

Deeper Insight:

AI-powered protein engineering is getting more accessible. If the tech delivers, new drugs and materials could move from lab to reality much faster.

Alibaba Releases Open Source “Qwen3 Coder” Model

Alibaba’s new open source coding model, Qwen3 Coder, enters the fray with claims of performance competitive with the likes of Claude and GPT. Real-world tests will tell if it lives up to the benchmarks.

Deeper Insight:

Open source models are closing the gap with proprietary leaders, giving developers and countries more options to build their own AI stacks.

Yahoo Japan Makes AI Use Mandatory for Employees

Yahoo Japan is now requiring all employees to use AI in their daily work, with company-wide ROI goals set for 2030.

Deeper Insight:

AI adoption is shifting from “try it out” to “do it or else.” Large organizations are setting the tone for AI literacy at scale, moving from encouragement to requirement.

HeyGen Launches All-in-One AI Video Agent

HeyGen rolled out a new video creation agent that writes scripts, sources images, records voiceovers, and edits transitions all in one workflow. The company’s avatars are leading in quality, but also in price.

Deeper Insight:

The race to automate video creation is heating up. Expect ad agencies and marketing teams to feel both the upside and the pressure to adapt, as quality improves and costs come down.

AI Note-Takers Now Common in Medical Appointments

Clinics are increasingly using AI note-takers to record and summarize patient visits. Patients are now being asked to sign releases, raising new privacy and consent questions.

Deeper Insight:

AI is reshaping medical documentation and transparency, but privacy and trust are now on the exam table.

AI Video Misinformation Hits Mainstream Politics

AI-generated videos have entered the political fray, with recent viral deepfakes depicting real figures in fabricated scenarios. Newsrooms and social platforms are struggling to respond.

Deeper Insight:

AI-powered misinformation is now a live political issue, not a hypothetical. Watch for policy, media, and public trust to be tested at new levels.

Did You Miss A Show Last Week?

Enjoy the replays on YouTube or take us with you in podcast form on Apple Podcasts or Spotify.