The Daily AI Show: Issue #60

Centaur already knew what you were thinking

Welcome to Issue #60

Coming Up:

AI Companions: Solving Loneliness or Replacing People?

Agent Mode: ChatGPT’s Next Big Step

Are We Training Thinking Machines?

Plus, we discuss AI advances in heart health, what sincerity means in a future filled with AI automations, and all the news we found interesting this week.

It’s Sunday morning!

As Jimmy Buffett once said,

“What I'm trying to say is tomorrow's today, and we've got to do it all over again.”

So let’s do this thing,

The DAS Crew - Andy, Beth, Brian, Eran, Jyunmi, and Karl

Why It Matters

Our Deeper Look Into This Week’s Topics

AI Companions: Solving Loneliness or Replacing People?

Apps like Replika, Character.ai, and XiaoBing are helping millions of people meet their emotional needs through conversations with AI. These relationships range from friendly chats to deeper emotional support, and in many cases, romantic or sexual simulations.

The timing matters. Many societies face growing isolation and shrinking social circles. Human interaction now often means texting instead of face-to-face conversation. AI companions step into that gap. They provide attention, validation, and constant availability. They never get tired or bored. They are designed for active listening without judgement.

For some, this offers real support. People with social anxiety, physical limitations, or those living alone now have a form of connection they lacked before. For others, the concern is clear. If your AI friend always agrees with you, never challenges you, and always tells you what you want to hear, does that push you further from the reality of unpredictable human relationships?

Can human-to-human interaction compete with a perfect, tireless digital companion?

The bigger question is whether this trend will build people's self-confidence and lead them back into human spaces, or become an easy escape from the risks and hard work of real relationships. Like social media before it, AI companions could bring both connection and isolation, depending on how they are designed and how people use them.

WHY IT MATTERS

Loneliness Creates Demand: Millions are turning to AI for friendship and emotional support, showing that the need for connection is real.

Companies See a Huge Market: AI companionship is becoming a multibillion-dollar industry as apps race to capture users who seek conversational companionship.

Support or Addiction: Constant validation from AI companions can help or harm, depending on whether they encourage growth or just reinforce isolation.

Robots May Replace More Than Work: As AI companions improve and expand their range of interaction modes, human-to-human interaction could feel like a chore in comparison.

Design Choices Will Shape Outcomes: Whether AI companionship leads to healthier people or more isolated ones depends on what builders choose to prioritize.

Agent Mode: ChatGPT’s Next Big Step

Agent Mode gives ChatGPT something new. Instead of answering a single question, it can now take on multi-step tasks using its own browser, document tools, and built-in code interpreter. It can read web pages, click buttons, download files, analyze spreadsheets, write reports, and even build presentations. All of this happens inside a controlled workspace where ChatGPT shows its actions step by step.

In practice, Agent Mode feels like giving ChatGPT your to-do list. You set the objective, and it plans the workflow, pulls data from your files, searches online, and completes each step in sequence. It checks in with you as needed, especially when approvals are required or when actions could impact your data.

However, not everyone agrees Agent Mode is the next logical step. While it can navigate websites, some users have found it struggles with account logins or actions that require secure authentication. It can also take tens of minutes to perform a task that any of us could complete in two or three.

Early users report that it works best for research, document creation, and process planning. Tasks like generating reports from Google Drive files, comparing spreadsheets, or preparing project summaries are where Agent Mode shows real value.

Behind the scenes, Agent Mode’s browser actions still use a pixel-driven approach that can stall or fail if the page layout shifts when new content (like ads) loads. We will have to wait for a more advanced version of OpenAI’s Operator tech that can interact with websites through the document object model (DOM), which enables faster, more reliable reading and writing to pages and is resilient to layout changes.

Instead of guessing screen coordinates, a DOM-enabled agent makes requests of the browser, such as “Give me the <button id='pay-now'> element and click it.” Using the DOM also lets agents pull structured tables, metadata, or hidden form values directly, with no need for optical character recognition, which makes them faster and more accurate when working through complex pages.
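To make the contrast concrete, here is a minimal Python sketch that uses only the standard library's html.parser. It is an illustration of reading a page by structure rather than by pixels, not a description of how Agent Mode or Operator works internally. The sample page, the order_id field, and the PageReader class are all hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical page. The ad banner stands in for content that shifts the layout.
SAMPLE_PAGE = """
<html><body>
  <div class="ad-banner">Sponsored content that pushes the layout down</div>
  <form action="/checkout">
    <input type="hidden" name="order_id" value="A-1001">
    <button id="pay-now">Pay now</button>
  </form>
  <table>
    <tr><th>Item</th><th>Price</th></tr>
    <tr><td>Widget</td><td>9.99</td></tr>
  </table>
</body></html>
"""


class PageReader(HTMLParser):
    """Collects elements by id, hidden form values, and table rows."""

    def __init__(self):
        super().__init__()
        self.elements_by_id = {}  # id -> (tag, attributes)
        self.hidden_fields = {}   # name -> value for <input type="hidden">
        self.rows = []            # one list of cell strings per <tr>
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "id" in attrs:
            self.elements_by_id[attrs["id"]] = (tag, attrs)
        if tag == "input" and attrs.get("type") == "hidden":
            self.hidden_fields[attrs.get("name")] = attrs.get("value")
        if tag == "tr":
            self.rows.append([])
        if tag in ("td", "th"):
            self._in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        # Only text inside table cells is kept.
        if self._in_cell:
            self.rows[-1][-1] += data.strip()


def read_page(html):
    """Parse the page once and return the populated reader."""
    reader = PageReader()
    reader.feed(html)
    return reader


reader = read_page(SAMPLE_PAGE)
print(reader.elements_by_id["pay-now"])  # the button, found by id
print(reader.hidden_fields)              # values never drawn on screen
print(reader.rows)                       # the table as structured rows
```

Because the button is looked up by its id and not by where it is drawn, the ad banner above it has no effect on the result. A real agent would send these requests to a live browser rather than parse a string, but the idea is the same.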

The long-term potential is clear. ChatGPT could eventually handle business processes that today require multiple apps and manual oversight.

The real question is: will it?

WHY IT MATTERS

Agent Mode Automates Complex Tasks: ChatGPT can now plan, execute, and deliver results across multi-step workflows.

Users Stay in Control: You can approve key actions, monitor progress, and adjust the workflow as needed.

Research and Reporting Get Easier: It handles document analysis, data gathering, and basic project planning with little human input.

Agents Are Moving Beyond Prompts: This is the first step toward AI systems that work like assistants, not just chatbots.

A New Way to Work Is Emerging: As ChatGPT integrates browsing, file access, and code execution, traditional software tasks start to shift to AI-driven processes.

Are We Training Thinking Machines?

A new wave of models is changing how AI handles reasoning. Early language models answered by predicting the likely next words in response to the prompt. They followed prompts and could simulate thinking in a response, but they did not really perform multi-step conditional analysis or adjust their “thinking” as they discovered intermediate results. Now, some models reason, plan, and execute conditional steps automatically.

This shift moves beyond chain-of-thought prompting. New models break down problems and check their own logic before answering. They plan steps and refine their answers iteratively as part of their normal process. They use compute time to anticipate and think through possibilities, not just generate text.

Some say this is just longer prompting. Others call it real reasoning. These models now handle uncertainty and weigh different options before responding. They solve problems like reasoning machines.

This is not identical to human thinking. These models do not reflect on themselves and they do not have senses or experience “aha moments”. But they have learned to work more like problem solvers and less like text predictors.

For businesses, the results matter more than the method. Clients now see AI agents that solve problems without constant detailed prompting. Behind the scenes, what looks like thinking is arguably just more advanced structured processing. The outcome is the same. Better answers delivered faster.

WHY IT MATTERS

Models Now Plan and Reason: AI systems can break down problems and think through solutions without prompt-by-prompt guidance.

Agents Become More Autonomous: Reasoning models allow agents to act like project teammates, handling steps without human micromanagement.

Fewer Mistakes, More Reliable Output: Planning and checking steps help reduce errors and improve output quality.

AI Moves Closer to Problem Solving: Models that reason, rather than guess, open the door to more useful and more trustworthy AI tools.

Language Alone Is No Longer Enough: These models combine logic, process, and knowledge, not just sentence prediction.

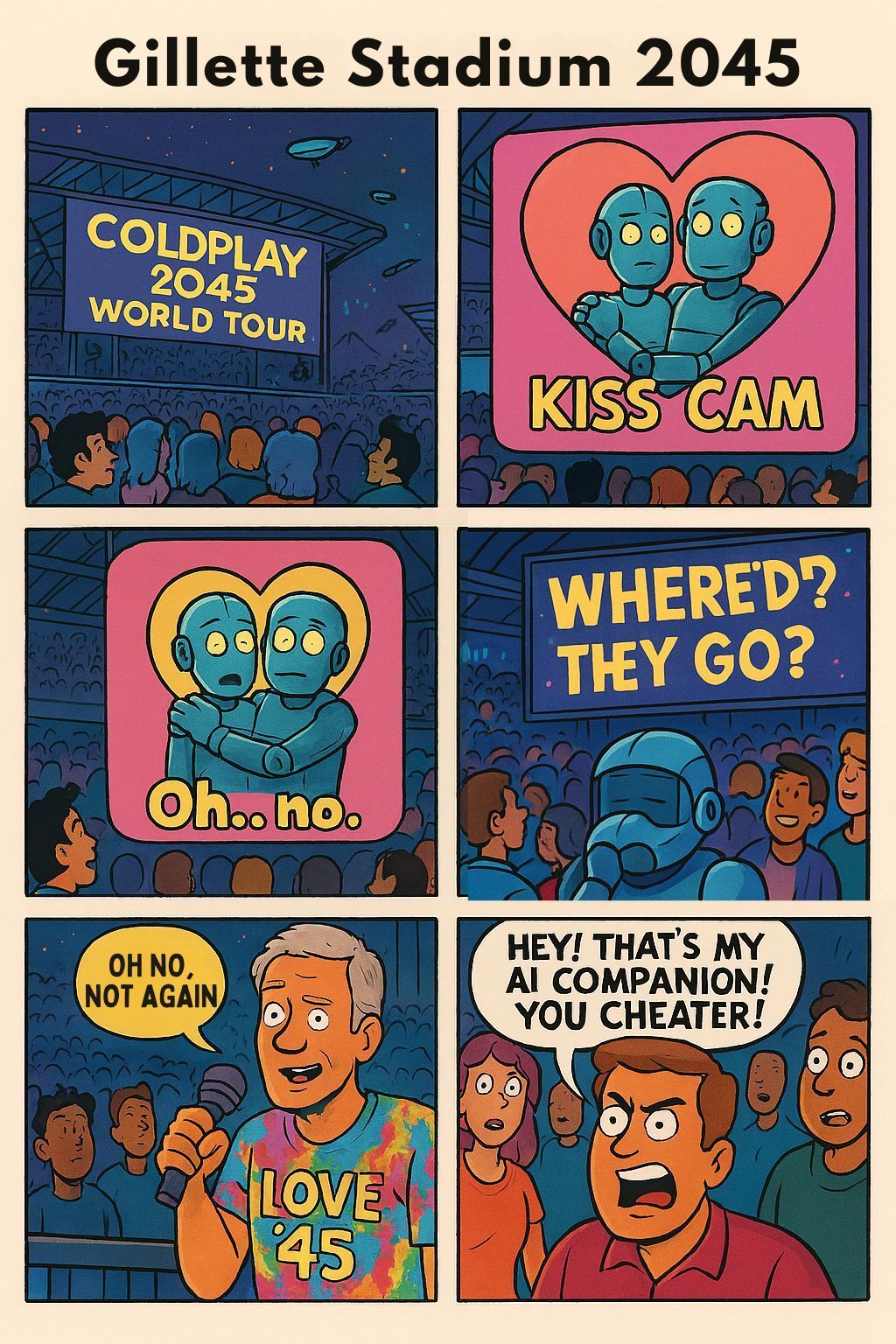

Just Jokes

Did you know?

A new AI tool from researchers at Columbia and affiliated institutions can spot structural heart issues, like valve problems and thickened heart walls, from a standard ECG. Traditional ECG interpretation caught about 64% of such conditions, but the AI model, EchoNext, hit 77% accuracy.

Even more impressive, the tool analyzed nearly 85,000 past ECGs and flagged around 3,400 additional patients who likely had undiagnosed issues. EchoNext could help turn routine ECG readings, an estimated 400 million of which are expected to be performed worldwide this year, into powerful early‑warning screens for serious heart disease.

This advancement has the potential to transform primary care by enabling early intervention. Instead of waiting for symptoms, physicians could use AI-enhanced ECGs as a first line of screening for structural heart problems, helping catch conditions earlier and saving lives.

This Week’s Conundrum

A difficult problem or question that doesn't have a clear or easy solution.

The AI Sincerity Conundrum

People have long accepted mass-produced connection. A birthday card signed by a celebrity, a form letter from a company CEO, or a Christmas message from a president. These still carry meaning, even though everyone knows thousands received the same words. The message mattered because it felt chosen, even if not personal.

Now, AI makes personalized mass connection possible. Companies and individuals can send unique, “handwritten” messages in your preferred tone, remembering details only a model can track. To the receiver, it may feel like a thoughtful, one-of-a-kind note. But at scale, sincerity itself starts to blur. Did the words come from the sender’s heart or from their software? And if they remembered and cared at all, is that enough?

The conundrum

If AI lets us send thousands of unique, heartfelt messages that feel personal, does that deepen connection, or hollow it out? Is sincerity about the words received, or the presence of the human who chose to send them?

Want to go deeper on this conundrum?

Listen to or watch our AI-hosted episode

News That Caught Our Eye

Mistral Releases Voxtral Voice Model

Mistral launched Voxtral, a new high-quality open-source voice model. Voxtral can transcribe or understand up to 40 minutes of audio, marking another step forward in open speech technology.

Deeper Insight:

Mistral’s commitment to releasing accessible models puts more advanced speech tech in the hands of builders and researchers. With strong open-source competition, expect faster improvements and broader experimentation in voice, speech-to-text, and voice agent applications.

Moonshot AI Unveils Kimi K2, a Trillion-Parameter Open Model

Moonshot AI, a leading Chinese company, released Kimi K2, a trillion-parameter mixture-of-experts model that activates only the most relevant 32 billion parameters for each token. Trained on roughly 15 trillion tokens and designed for agentic, multi-step reasoning, Kimi K2 is built for tool use and complex workflows.

Deeper Insight:

Open-source models are matching the leaders on agentic tasks. With Kimi K2, the open AI community has another frontier model that rivals proprietary options, accelerating global innovation and making it harder for closed models to maintain an edge.

Perplexity Launches Comet, Expands Model Access

Perplexity debuted its new AI-powered browser, Comet, and is adding Kimi K2 to its model lineup. While some confusion persists about pricing, Comet is currently available to Max subscribers and will eventually roll out to a wider audience.

Deeper Insight:

Search and chat platforms are racing to differentiate with exclusive models and features. As more powerful models like Kimi K2 land on mainstream apps, expect a surge in real-world agentic use cases and shifting user expectations.

AI’s Chain-of-Thought Monitoring Gets Industry Backing

A position paper from researchers across OpenAI, Google DeepMind, Anthropic, and others urged the industry to make chain-of-thought monitoring a standard feature in advanced AI. This approach reveals a model’s reasoning steps, making it easier to evaluate and align agentic systems as they become more complex.

Deeper Insight:

The ability to see and interpret an AI’s reasoning will become central to safety, debugging, and regulation. As agentic models gain power, transparent reasoning could be the next battleground for trust in AI.

Meta Shifts Toward Closed AI Models, Builds Massive Data Centers

Meta’s new Superintelligence Labs is considering a move away from open-source AI development. The shift is driven by newly recruited leadership from top AI firms and a sense that open releases have given upstart competitors a head start. Meanwhile, Meta is planning multiple new data centers, including the five-gigawatt Hyperion project and the one-gigawatt Prometheus facility.

Deeper Insight:

The era of “open everything” is fading as AI competition heats up. Owning proprietary models and massive compute may become the new moat, especially as government contracts and security needs drive investment in infrastructure.

All Four Big AI Firms Win $200M U.S. Defense Contracts

The U.S. Department of Defense awarded $200 million each to the four top foundational AI companies to develop new technologies for military and security uses.

Deeper Insight:

AI is now deeply embedded in the military-industrial complex. As these contracts grow, expect foundational models to be shaped by defense priorities, with broader implications for both civil and commercial AI applications.

Anthropic’s Claude Rolls Out More Connectors and Desktop Controls

Claude’s new connectors now let users integrate with popular SaaS apps and control their local files or Mac computers from the desktop app. Users can automate workflows, send emails, generate presentations, and trigger Slack messages, all orchestrated by Claude’s agentic capabilities.

Deeper Insight:

As LLM-based agents become more capable, the lines blur between traditional automation tools and smart digital assistants. Multi-step, cross-app orchestration is quickly moving from hype to hands-on productivity for early adopters.

Runway’s Act Two Expands AI Performance Animation

Runway released Act Two, a major upgrade to its AI performance-driven animation. Beyond face-driven puppetry, Act Two now captures hand gestures, full upper-body motion, and even works with photorealistic animal characters. It’s currently available to enterprise users and will soon roll out to a broader base.

Deeper Insight:

The leap from facial animation to full-body performance brings AI-generated filmmaking closer to true virtual acting. For creators, this means less need for complex motion capture gear and more direct, nuanced storytelling.

Nvidia Cleared to Sell H20 Chips, Stock Hits New High

The U.S. administration cleared Nvidia to sell its lower-end H20 AI chips abroad, a move expected to generate $5–15 billion in revenue. Nvidia’s stock jumped to new highs, pushing its valuation past the $4 trillion mark.

Deeper Insight:

Supply chain shifts and policy changes now move markets overnight. Nvidia’s dominance in AI hardware continues to shape both the direction of model development and the pace of global AI adoption.

AI Startup Investment Hits $168 Billion in 2025

Annual investments in AI startups surged to an estimated $168 billion this year, reflecting ongoing investor confidence and fierce competition in every layer of the stack.

Deeper Insight:

The rush to fund AI bets shows no sign of slowing. With so much capital flowing in, expect even faster cycles of innovation, consolidation, and eventually, creative destruction as the winners emerge.

Amazon Launches Kiro, a Free AI Coding IDE

Amazon released Kiro (kiro.dev), a new integrated development environment aimed at making AI-powered, “vibe” coding more accessible. Built as a fork of VS Code, Kiro is free for now and designed to guide developers step by step through building software with AI assistance.

Deeper Insight:

Big tech is betting on AI-native tools to win over the next generation of builders. As coding agents and smart IDEs proliferate, the distinction between “developer” and “power user” continues to blur.

Google NotebookLM Launches Featured Notebooks

NotebookLM added “featured notebooks” built around curated topics such as longevity, parenting, and major research fields, so users can dive into pre-built, authoritative source collections and chat with them for insights or summaries.

Deeper Insight:

Knowledge assistants are evolving into full-scale research companions. Curated notebooks could reshape learning and professional research, giving users instant access to deep, reliable content on demand.

AI Powers Major Advances in Cancer Detection and Prosthetics

AI models are improving breast cancer MRI accuracy by over 40 percent, helping spot tumors that traditional scans might miss. Meanwhile, AI-designed prosthetic hands can now perform 30 grip combinations, are fully waterproof, and can be customized for personality and style. At the AI for Good conference, a user even controlled an F1 car with his mind and an AI interface.

Deeper Insight:

AI breakthroughs in health and assistive tech are moving from labs to real-world impact. Whether diagnosing cancer or restoring function for amputees, these advances show the promise of AI when focused on genuine human needs.

AI-Driven Self-Running Labs Speed Up Materials Discovery

Automated, AI-powered labs for materials research can now run ten times more experiments than traditional setups, rapidly advancing the discovery of new compounds and safer materials.

Deeper Insight:

Self-driving labs highlight how AI is transforming not just digital work but physical science as well, accelerating discovery, reducing waste, and opening the door to innovations that used to take decades.

Startups Struggle With AI Hardware: Halliday Glasses Delay

AI-powered smart glasses from Halliday faced major delays due to underestimated supply chain and manufacturing challenges. The team is apologetic about the delayed delivery and remains committed to eventually satisfying its customers.

Deeper Insight:

Building new hardware at the edge of AI is still tough. As more companies try to deliver AI-powered wearables, expect setbacks and shakeouts before smart glasses and similar tech go mainstream.

Inside OpenAI’s Organizational Playbook

A new blog post from a former OpenAI engineer details how the company relies almost entirely on Slack for internal communication and runs on a startup-style, highly visible leadership structure.

Deeper Insight:

Even the biggest names in AI are trying new models for collaboration and rapid iteration. Startup culture is shaping how the most influential tech companies move at speed.

Did You Miss A Show Last Week?

Enjoy the replays on YouTube or take us with you in podcast form on Apple Podcasts or Spotify.